Deploying the General Parallel File System (GPFS)

Introduction.

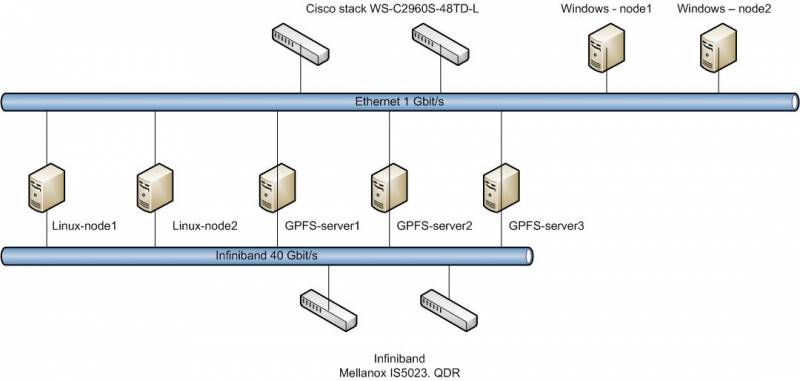

This article walks through building fault-tolerant storage based on GPFS v3.5 with a capacity of 90 TB. The file system was deployed on three Red Hat Linux servers with local disks. Linux (CentOS) and Windows 2008 R2 nodes were used as clients. Windows clients were connected over 1 Gbit/s Ethernet; the back end used InfiniBand RDMA.

One fine day the geo-data online service kosmosnimki.ru exhausted the capacity of its EMC Isilon X200. Budget cuts demanded a cheaper solution for data storage. (The estimated cost of storing 1 TB on the EMC Isilon X200 is about 4 k$, and support costs 25 k$/year. The price of the solution was 150 k$ for 45 TB on SATA disks.)

A storage peculiarity of the kosmosnimki service is the large number of files (up to 300,000) in a single directory. The average file size is ~80 MB.

In addition, a new task appeared: deploying an Active/Passive PostgreSQL cluster for the osm service. Its shared storage had to be on SSD for fast I/O and seek operations.

GPFS was chosen because it meets all of these service requirements.

GPFS is a distributed file system. Its features include:

- separated storing of data and meta-data

- replication of data and meta-data

- snapshots

- quotas

- ACLs (POSIX/NFSv4)

- data placement policies (when more than one pool exists)

- support for Linux, AIX and Windows nodes

- RDMA/InfiniBand (Linux only)

GPFS architecture.

GPFS uses the following data structures:

- inodes

- indirect blocks

- data blocks

Inodes and indirect blocks are metadata. Data blocks hold the data.

Inodes of small files and directories also hold the data itself. Indirect blocks are used for storing large files.

A data block consists of 32 subblocks. When creating the file system, choose the data block size as 32 × (block size of the physical disk or stripe size of the RAID volume).

Remember that HDDs larger than 2 TB have a 4 KB block size, and the SSD page size is 8 KB.
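As a quick check of the 32-subblock rule, the sketch below multiplies a device block (or stripe) size by 32. The function name `gpfs_block_size_kb` is a hypothetical helper introduced for illustration, not a GPFS command.

```shell
#!/bin/sh
# Hypothetical helper: suggest a GPFS block size (in KB) from the
# physical block, page or RAID stripe size (in KB), using the
# 32-subblocks-per-block rule described above.
gpfs_block_size_kb() {
    physical_kb=$1
    echo $(( physical_kb * 32 ))
}

gpfs_block_size_kb 4   # HDD with 4 KB blocks -> 128
gpfs_block_size_kb 8   # SSD with 8 KB pages  -> 256
```

The 8 KB case gives 256 KB, which matches the `-B 256K` value passed to `mmcrfs` later in this article.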

GPFS consists of:

- storage pools - pools of disks or RAID volumes

Disks and RAID volumes are presented as Network Shared Disks (NSDs). An NSD can be either a locally installed disk/RAID volume or external network storage.

- policies - rules for storing or migrating data (files)

- filesets - provide a method for partitioning a file system and allow administrative operations at a finer granularity than the entire file system (for example, if you need separate snapshots for each pool)

Replication is configured when the file system is created, and the setting can be changed later. Copies of data and metadata are placed in different failure groups.

The failure group is assigned when the NSD is created.

The client itself writes all N replicas at write time. There is also a way to restore the default number of data replicas manually. Thus the data write speed will be (available bandwidth) / (number of replicas N).
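The effect of replication on write speed can be illustrated with a small calculation. This is only a sketch: the helper name and the 1000 MB/s bandwidth are hypothetical example values, not measurements from this cluster.

```shell
#!/bin/sh
# Hypothetical helper: effective client write speed in MB/s when the
# client itself writes every replica.
effective_write_mbps() {
    bandwidth_mbps=$1   # available bandwidth, MB/s (example value)
    replicas=$2         # number of replicas N
    echo $(( bandwidth_mbps / replicas ))
}

effective_write_mbps 1000 2   # two replicas halve the speed -> 500
```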

Requirements and Calculations

Requirements:

- High speed of random read/write

- Volume of disks pool 90 TB

- Volume of SSD pool 1.4 TB

- Write speed for SSD pool - 1.7 GB/s

- Storage cost of 1 TB no more than 1.2 k$

It is given that:

- Average file size on disks pool - 50 MB

- Average file size on SSD pool - 1 MB

Calculations:

Calculation of storage size for meta-data volume:

- Vhdd - Data volume of HDD (bytes)

- Vssd - Data volume of SSD (bytes)

- Shdd - Average file size on HDD (bytes)

- Sssd - Average file size on SSD (bytes)

- Sinode - Inode size (bytes)

- Ksub - Subjective empirical coefficient accounting for the possible number of directories and very small files

- Kext - Scaling coefficient (headroom for growth without adding metadata disks)

- Vmeta - Volume of meta-data

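$$V_{meta} = \left( \frac{V_{hdd} \cdot K_{sub}}{S_{hdd}} + \frac{V_{ssd} \cdot K_{sub}}{S_{ssd}} \right) \cdot S_{inode} \cdot K_{ext}$$

$$V_{meta} = \left( \frac{90 \times 10^{12} \cdot 8}{50 \times 10^{6}} + \frac{1.4 \times 10^{12} \cdot 8}{1 \times 10^{6}} \right) \cdot 4096 \cdot 3 \approx 320\ \text{GB}$$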
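The same estimate can be reproduced with integer shell arithmetic. The variable names below are illustrative; the values are the ones used in the formula above.

```shell
#!/bin/sh
# Metadata volume estimate with the values from the formula above.
v_hdd=90000000000000   # HDD data volume, bytes (90 TB)
v_ssd=1400000000000    # SSD data volume, bytes (1.4 TB)
s_hdd=50000000         # average file size on HDD, bytes
s_ssd=1000000          # average file size on SSD, bytes
s_inode=4096           # inode size, bytes
k_sub=8                # coefficient for directories and small files
k_ext=3                # scaling coefficient

vmeta_bytes() {
    files_hdd=$(( v_hdd * k_sub / s_hdd ))   # 14400000
    files_ssd=$(( v_ssd * k_sub / s_ssd ))   # 11200000
    echo $(( (files_hdd + files_ssd) * s_inode * k_ext ))
}

vmeta_bytes   # prints 314572800000
```

The result is about 315 GB in decimal units, in line with the ~320 GB quoted above.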

To keep seek times low, SSDs (RAID 1) will be used for metadata.

For reliability, the number of replicas is 2.

Scheme and Equipment

Scheme

Each gpfs-nodeX and linux-nodeX node is connected to each of the Ethernet and InfiniBand switches. Bonding is used for the connections to the Ethernet switches.

Equipment

Calculations below…

InfiniBand switches (2) - Mellanox IS5023

Ethernet switches (2) - Cisco WS-C2960S-48TD-L + stack module

gpfs-nodeX servers (3):

| Type | Model | Quantity |
|---|---|---|
| Chassis | Supermicro SC846TQ-R900B | 1 |
| MB | Supermicro X9SRL-F | 1 |
| CPU | Intel® Xeon® Processor E5-2640 | 1 |
| MEM | KVR13LR9D4/16HM | 4 |
| Controller | Adaptec RAID 72405 | 1 |
| LAN | Intel <E1G44ET2> Gigabit Adapter Quad Port (OEM) PCI-E x4 10/100/1000 Mbps | 1 |
| InfiniBand | Mellanox MHQH29C-XTR | 1 |
| IB cables | MC2206310-003 | 2 |
| System HDD | 600 GB SAS 2.0 Seagate Cheetah 15K.7 <ST3600057SS> 3.5" 15000 rpm 16 MB | 2 |
| SSD | 240 GB SATA 6 Gb/s OCZ Vertex 3 <VTX3-25SAT3-240G> 2.5" MLC + 3.5" adapter | 6 |
| HDD | 4 TB SATA 6 Gb/s Hitachi Ultrastar 7K4000 <HUS724040ALE640> 3.5" 7200 rpm 64 MB | 16 |

Manual for calculation of PVU values

The cost of the equipment plus three server licenses and five client licenses for IBM GPFS was ~100 k$.

Cost of storing 1 TB of data: 1.11 k$.
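The per-terabyte figure follows from dividing the total cost by the 90 TB of usable capacity. A minimal sketch, with a hypothetical helper name:

```shell
#!/bin/sh
# Hypothetical helper: storage cost in USD per TB (integer division).
cost_per_tb_usd() {
    total_usd=$1
    capacity_tb=$2
    echo $(( total_usd / capacity_tb ))
}

cost_per_tb_usd 100000 90   # ~100 k$ for 90 TB -> 1111, i.e. ~1.11 k$
```

This stays under the 1.2 k$ limit set in the requirements.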

Configuration

Briefly, the initial configuration of Red Hat/CentOS on the gpfs-nodeX and linux-nodeX nodes:

1. (gpfs-nodeX) SAS disks were used for the system partition. Two RAID 1 volumes and 20 single-disk volumes were created on the RAID controller. The read/write cache must be turned off for every volume that will be used by GPFS. With the cache turned on, we observed NSDs being lost, together with their data, after a node reboot.

    yum groupinstall "Infiniband Support"
    yum groupinstall "Development tools"
    yum install bc tcl tk tcsh mc ksh libaio ntp ntpdate openssh-clients wget tar net-tools rdma opensm man infiniband-diags
    chkconfig rdma on
    chkconfig opensm on

2. All Ethernet ports are combined in a bond (mode 5).

3. Set up passwordless SSH access between nodes for the root user. The key should be created on one node and then copied to /root/.ssh/ on the others.

    ssh-keygen -t dsa        # create a passwordless key
    cd /root/.ssh
    cat id_dsa.pub >> authorized_keys
    chown root.root authorized_keys
    chmod 600 authorized_keys
    echo "StrictHostKeyChecking no" > config

4. Don't forget to add all host names to /etc/hosts.

5. Check that /usr/lib64/libibverbs.so exists.

If it does not, create the symlink:

    ln -s /usr/lib64/libibverbs.so.1.0.0 /usr/lib64/libibverbs.so
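The check and the symlink creation can be combined into one idempotent step. The sketch below is an illustration only: the function name and the optional directory argument are hypothetical, added so the function can be tried outside /usr/lib64.

```shell
#!/bin/sh
# Sketch: create the unversioned libibverbs.so symlink only if it is
# missing. With no argument it works on /usr/lib64.
ensure_libibverbs_link() {
    libdir=${1:-/usr/lib64}
    if [ ! -e "$libdir/libibverbs.so" ] && [ ! -L "$libdir/libibverbs.so" ]; then
        ln -s "$libdir/libibverbs.so.1.0.0" "$libdir/libibverbs.so"
    fi
}
```

Calling `ensure_libibverbs_link` as root on each Linux node is safe to repeat, because an existing file or link is left untouched.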

6. Configure ntpd on all nodes

7. iptables

Allow the sshd and GPFS ports (see the official IBM documentation).

8. (gpfs-nodeX only) Install tuned with the latency-performance profile

    yum install tuned
    tuned-adm profile latency-performance

9. Configuration of sysctl.conf

    net.ipv4.tcp_timestamps=0
    net.ipv4.tcp_sack=0
    net.core.netdev_max_backlog=250000
    net.core.rmem_max=16777216
    net.core.wmem_max=16777216
    net.core.rmem_default=16777216
    net.core.wmem_default=16777216
    net.core.optmem_max=16777216
    net.ipv4.tcp_mem=16777216 16777216 16777216
    net.ipv4.tcp_rmem=4096 87380 16777216
    net.ipv4.tcp_wmem=4096 65536 16777216

10. Add max open files to /etc/security/limits.conf (depends on your requirements)

    * hard nofile 1000000
    * soft nofile 1000000

Briefly, the initial configuration of Windows 2008 R2 on the windows-nodeX nodes:

We spent three months trying to install GPFS on Windows nodes before a new patch release was issued. This work was done by my colleague Dmitry Pugachev.

1. Installing SUA

Utilities and SDK for Subsystem for UNIX-based Applications_AMD64.exe - available from the website. The manual is there.

Step 2 is not required with GPFS version >= 3.5.0.11, which includes OpenSSH.

2. Installing OpenSSH components

- pkg-current-bootstrap60x64.exe and pkg-current-bundlecomplete60x64.exe are available on the website

- don't forget to set "StrictModes no" for ssh

    /etc/init.d/sshd stop
    /etc/init.d/sshd start

3. Windows6.1-KB2639164-x64.msu - an update for Windows Server 2008 R2 SP1 that fixes problems with GPFS

4. Creating the root user

- The home directory must be set to C:\root in the profile settings.

- Add the user to the Administrators group.

- Copy the ssh keys to the .ssh directory.

- Allow "Log on as a service" in the local policy.

5. Firewall and UAC

- disable UAC

- open the firewall for the SSH and GPFS ports

GPFS installation

REDHAT/CENTOS

1. Installation

    ./gpfs_install-3.5.0-0_x86_64 --text-only
    cd /usr/lpp/mmfs/3.5/
    rpm -ivh *.rpm

2. Installing updates (copy them to this directory before starting the installation)

    cd /usr/lpp/mmfs/3.5/update
    rpm -Uvh *.rpm

3. Kernel module compilation

    cd /usr/lpp/mmfs/src && make Autoconfig && make World && make rpm
    rpm -ivh ~/rpmbuild/RPMS/x86_64/gpfs.gplbin*

For CentOS:

    cd /usr/lpp/mmfs/src && make LINUX_DISTRIBUTION=REDHAT_AS_LINUX Autoconfig && make World && make rpm
    rpm -ivh ~/rpmbuild/RPMS/x86_64/gpfs.gplbin*

4. Set paths

    echo 'export PATH=$PATH:/usr/lpp/mmfs/bin' > /etc/profile.d/gpfs.sh
    export PATH=$PATH:/usr/lpp/mmfs/bin

WINDOWS

1. gpfs-3.5-Windows-license.msi - license component

2. gpfs-3.5.0.10-WindowsServer2008.msi - the latest GPFS update. Updates are available on this page

GPFS is now installed.

Cluster configuration

Configuration is carried out on a single node. Quorum is used for cluster reliability.

1. Creating the file with the node descriptions.

    vi /root/gpfs_create

    gpfs-node1:quorum-manager
    gpfs-node2:quorum-manager
    gpfs-node3:quorum-manager
    linux-node1:client
    linux-node2:client
    windows-node1:client
    windows-node2:client

2. Cluster creation.

mmcrcluster -N /root/gpfs_create -p gpfs-node1 -s gpfs-node2 -r /usr/bin/ssh -R /usr/bin/scp -C gpfsstorage0

checking

mmlscluster

applying licenses

    mmchlicense server --accept -N gpfs-node1,gpfs-node2,gpfs-node3
    mmchlicense client --accept -N linux-node1,linux-node2,windows-node1,windows-node2

checking licenses

    mmlslicense -L

3. NSD creation

Five pools were created in the cluster: system, postgres and three pools for reliability (eight is the maximum number of pools per file system). Data and metadata are stored separately.

Creating the NSD description file.

Format:

    block dev:node name::type of data:failure group number:NSD name:pool name:

- block dev - sd*

- type of data - metadataOnly, dataOnly, dataAndMetadata

Each node is a separate failure group.

    cat /root/nsd_creation
    sdb:gpfs-node1::metadataOnly:3101:node1GPFSMETA::
    sdc:gpfs-node1::dataOnly:3101:node1SSD240GNSD01:poolpostgres:
    sdd:gpfs-node1::dataOnly:3101:node1SSD240GNSD02:poolpostgres:
    sde:gpfs-node1::dataOnly:3101:node1SSD240GNSD03:poolpostgres:
    sdf:gpfs-node1::dataOnly:3101:node1SSD240GNSD04:poolpostgres:
    sdg:gpfs-node1::dataOnly:3101:node1HDD4TNSD01::
    sdh:gpfs-node1::dataOnly:3101:node1HDD4TNSD02::
    sdi:gpfs-node1::dataOnly:3101:node1HDD4TNSD03::
    sdj:gpfs-node1::dataOnly:3101:node1HDD4TNSD04::
    sdk:gpfs-node1::dataOnly:3101:node1HDD4TNSD05:pool1:
    sdl:gpfs-node1::dataOnly:3101:node1HDD4TNSD06:pool1:
    sdm:gpfs-node1::dataOnly:3101:node1HDD4TNSD07:pool1:
    sdn:gpfs-node1::dataOnly:3101:node1HDD4TNSD08:pool1:
    sdo:gpfs-node1::dataOnly:3101:node1HDD4TNSD09:pool2:
    sdp:gpfs-node1::dataOnly:3101:node1HDD4TNSD10:pool2:
    sdq:gpfs-node1::dataOnly:3101:node1HDD4TNSD11:pool2:
    sdr:gpfs-node1::dataOnly:3101:node1HDD4TNSD12:pool2:
    sds:gpfs-node1::dataOnly:3101:node1HDD4TNSD13:pool3:
    sdt:gpfs-node1::dataOnly:3101:node1HDD4TNSD14:pool3:
    sdv:gpfs-node1::dataOnly:3101:node1HDD4TNSD15:pool3:
    sdu:gpfs-node1::dataOnly:3101:node1HDD4TNSD16:pool3:
    sdb:gpfs-node2::metadataOnly:3201:node2GPFSMETA::
    sdc:gpfs-node2::dataOnly:3201:node2SSD240GNSD01:poolpostgres:
    sdd:gpfs-node2::dataOnly:3201:node2SSD240GNSD02:poolpostgres:
    sde:gpfs-node2::dataOnly:3201:node2SSD240GNSD03:poolpostgres:
    sdf:gpfs-node2::dataOnly:3201:node2SSD240GNSD04:poolpostgres:
    sdg:gpfs-node2::dataOnly:3201:node2HDD4TNSD01::
    sdh:gpfs-node2::dataOnly:3201:node2HDD4TNSD02::
    sdi:gpfs-node2::dataOnly:3201:node2HDD4TNSD03::
    sdj:gpfs-node2::dataOnly:3201:node2HDD4TNSD04::
    sdk:gpfs-node2::dataOnly:3201:node2HDD4TNSD05:pool1:
    sdl:gpfs-node2::dataOnly:3201:node2HDD4TNSD06:pool1:
    sdm:gpfs-node2::dataOnly:3201:node2HDD4TNSD07:pool1:
    sdn:gpfs-node2::dataOnly:3201:node2HDD4TNSD08:pool1:
    sdo:gpfs-node2::dataOnly:3201:node2HDD4TNSD09:pool2:
    sdp:gpfs-node2::dataOnly:3201:node2HDD4TNSD10:pool2:
    sdq:gpfs-node2::dataOnly:3201:node2HDD4TNSD11:pool2:
    sdr:gpfs-node2::dataOnly:3201:node2HDD4TNSD12:pool2:
    sds:gpfs-node2::dataOnly:3201:node2HDD4TNSD13:pool3:
    sdt:gpfs-node2::dataOnly:3201:node2HDD4TNSD14:pool3:
    sdv:gpfs-node2::dataOnly:3201:node2HDD4TNSD15:pool3:
    sdu:gpfs-node2::dataOnly:3201:node2HDD4TNSD16:pool3:
    sdb:gpfs-node3::metadataOnly:3301:node3GPFSMETA::
    sdc:gpfs-node3::dataOnly:3301:node3SSD240GNSD01:poolpostgres:
    sdd:gpfs-node3::dataOnly:3301:node3SSD240GNSD02:poolpostgres:
    sde:gpfs-node3::dataOnly:3301:node3SSD240GNSD03:poolpostgres:
    sdf:gpfs-node3::dataOnly:3301:node3SSD240GNSD04:poolpostgres:
    sdg:gpfs-node3::dataOnly:3301:node3HDD4TNSD01::
    sdh:gpfs-node3::dataOnly:3301:node3HDD4TNSD02::
    sdi:gpfs-node3::dataOnly:3301:node3HDD4TNSD03::
    sdj:gpfs-node3::dataOnly:3301:node3HDD4TNSD04::
    sdk:gpfs-node3::dataOnly:3301:node3HDD4TNSD05:pool1:
    sdl:gpfs-node3::dataOnly:3301:node3HDD4TNSD06:pool1:
    sdm:gpfs-node3::dataOnly:3301:node3HDD4TNSD07:pool1:
    sdn:gpfs-node3::dataOnly:3301:node3HDD4TNSD08:pool1:
    sdo:gpfs-node3::dataOnly:3301:node3HDD4TNSD09:pool2:
    sdp:gpfs-node3::dataOnly:3301:node3HDD4TNSD10:pool2:
    sdq:gpfs-node3::dataOnly:3301:node3HDD4TNSD11:pool2:
    sdr:gpfs-node3::dataOnly:3301:node3HDD4TNSD12:pool2:
    sds:gpfs-node3::dataOnly:3301:node3HDD4TNSD13:pool3:
    sdt:gpfs-node3::dataOnly:3301:node3HDD4TNSD14:pool3:
    sdv:gpfs-node3::dataOnly:3301:node3HDD4TNSD15:pool3:
    sdu:gpfs-node3::dataOnly:3301:node3HDD4TNSD16:pool3:
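Because the descriptor file is highly regular, it could also be generated rather than typed by hand. The sketch below is one possible way to do that; `nsd_lines` is a hypothetical helper, and it assumes device letters are assigned in strict alphabetical order (the listing above has sdv before sdu for the last two disks), so the real device names should be checked on each node.

```shell
#!/bin/sh
# Sketch: print the NSD descriptor lines for one node in the format
#   device:node::usage:failure_group:nsd_name:pool:
nsd_lines() {
    n=$1                          # node number: 1, 2 or 3
    node="gpfs-node$n"
    fg="3${n}01"                  # failure group, one per node
    # Assumed device order: sdb ... sdv (21 devices)
    set -- b c d e f g h i j k l m n o p q r s t u v
    echo "sd$1:$node::metadataOnly:$fg:node${n}GPFSMETA::"
    shift
    i=1
    while [ $i -le 4 ]; do        # four SSDs for the postgres pool
        echo "sd$1:$node::dataOnly:$fg:node${n}SSD240GNSD0$i:poolpostgres:"
        shift
        i=$(( i + 1 ))
    done
    i=1
    while [ $i -le 16 ]; do       # sixteen HDDs, four per pool
        case $(( (i - 1) / 4 )) in
            0) pool="" ;;         # empty pool field, as in the listing
            1) pool="pool1" ;;
            2) pool="pool2" ;;
            3) pool="pool3" ;;
        esac
        printf 'sd%s:%s::dataOnly:%s:node%sHDD4TNSD%02d:%s:\n' \
            "$1" "$node" "$fg" "$n" "$i" "$pool"
        shift
        i=$(( i + 1 ))
    done
}
```

Running `nsd_lines 1`, `nsd_lines 2` and `nsd_lines 3` in turn and redirecting the output to a file gives 21 lines per node, which can then be compared with the hand-written file before use.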

4. Configuration and startup

To use RDMA in GPFS:

    mmchconfig verbsRdma=enable
    mmchconfig verbsPorts="mlx4_0/1 mlx4_0/2" -N gpfs-node1,gpfs-node2,gpfs-node3,linux-node1,linux-node2

Performance tuning:

    mmchconfig maxMBpS=16000      # default value for GPFS 3.5 is 2048
    mmchconfig pagepool=32000M    # cache size on a client, or on a server that also acts as a client

Starting GPFS (on all nodes):

    mmstartup -a

To check:

    mmgetstate -a

Node number Node name GPFS state

------------------------------------------

1 gpfs-node1 active

2 gpfs-node2 active

3 gpfs-node3 active

5 linux-node1 active

7 linux-node2 active

8 windows-node1 active

9 windows-node2 active

Checking that RDMA works correctly (not for Windows nodes):

cat /var/adm/ras/mmfs.log.latest

Thu Jul 4 21:14:49.084 2013: mmfsd initializing. {Version: 3.5.0.9 Built: Mar 28 2013 20:10:14} ...

Thu Jul 4 21:14:51.132 2013: VERBS RDMA starting.

Thu Jul 4 21:14:51.133 2013: VERBS RDMA library libibverbs.so (version >= 1.1) loaded and initialized.

Thu Jul 4 21:14:51.225 2013: VERBS RDMA device mlx4_0 port 1 opened.

Thu Jul 4 21:14:51.226 2013: VERBS RDMA device mlx4_0 port 2 opened.

Thu Jul 4 21:14:51.227 2013: VERBS RDMA started.
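Instead of reading the log by eye, the final "VERBS RDMA started" line can be searched for. This is a minimal sketch; the function name `rdma_started` is hypothetical, and the default path is the log file shown above.

```shell
#!/bin/sh
# Sketch: succeed (exit status 0) if the given GPFS log contains the
# "VERBS RDMA started" message, fail otherwise.
rdma_started() {
    log=${1:-/var/adm/ras/mmfs.log.latest}
    grep -q "VERBS RDMA started" "$log"
}
```

On a Linux node it could be used as `rdma_started && echo "RDMA is up"`.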

File system creation, file placement policies, NFSv4 ACLs and snapshots

1. Creation of file system

mmcrfs /gpfsbig /dev/gpfsbig -F /root/nsd_creation -A yes -B 256K -Q no -v yes -r 2 -R 2 -m 2 -M 2

2. Placement policies

The RAND function was used to balance data between the system pool and pool1 to pool3. Unlike the C function random(), RAND has a nonlinear distribution.

The results below were obtained by testing.

Placement distribution was tested by writing 6000 files.

Attention: the RAND policy doesn't work with Windows servers/clients. All data will be stored in the default pool.

The test policy:

    mmlspolicy gpfsbig -L
    RULE 'LIST_1' LIST 'allfiles' FROM POOL 'system'
    RULE 'LIST_2' LIST 'allfiles' FROM POOL 'pool1'
    RULE 'LIST_3' LIST 'allfiles' FROM POOL 'pool2'
    RULE 'LIST_4' LIST 'allfiles' FROM POOL 'pool3'
    RULE 'to_pool1' SET POOL 'pool2' LIMIT(99) WHERE INTEGER(RAND()*100)<24
    RULE 'to_pool2' SET POOL 'pool3' LIMIT(99) WHERE INTEGER(RAND()*100)<34
    RULE 'to_pool3' SET POOL 'pool4' LIMIT(99) WHERE INTEGER(RAND()*100)<50
    RULE DEFAULT SET POOL 'system'

Two test results:

1.

    Rule#  Hit_Cnt  KB_Hit  Chosen  KB_Chosen  KB_Ill  Rule
    0      1476     0       1476    0          0       RULE 'LIST_1' LIST 'allfiles' FROM POOL 'system'
    1      1436     0       1436    0          0       RULE 'LIST_2' LIST 'allfiles' FROM POOL 'pool1'
    2      1581     0       1581    0          0       RULE 'LIST_3' LIST 'allfiles' FROM POOL 'pool2'
    3      1507     0       1507    0          0       RULE 'LIST_4' LIST 'allfiles' FROM POOL 'pool3'

2.

    Rule#  Hit_Cnt  KB_Hit  Chosen  KB_Chosen  KB_Ill  Rule
    0      1446     0       1446    0          0       RULE 'LIST_1' LIST 'allfiles' FROM POOL 'system'
    1      1524     0       1524    0          0       RULE 'LIST_2' LIST 'allfiles' FROM POOL 'pool1'
    2      1574     0       1574    0          0       RULE 'LIST_3' LIST 'allfiles' FROM POOL 'pool2'
    3      1456     0       1456    0          0       RULE 'LIST_4' LIST 'allfiles' FROM POOL 'pool3'

The final policy:

    cat /root/policy
    RULE 'ssd' SET POOL 'poolpostgres' WHERE USER_ID = 26
    RULE 'to_pool1' SET POOL 'pool1' LIMIT(99) WHERE INTEGER(RAND()*100)<24
    RULE 'to_pool2' SET POOL 'pool2' LIMIT(99) WHERE INTEGER(RAND()*100)<34
    RULE 'to_pool3' SET POOL 'pool3' LIMIT(99) WHERE INTEGER(RAND()*100)<50
    RULE DEFAULT SET POOL 'system'
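One possible reading of the thresholds 24, 34 and 50 is that each rule only sees the files that earlier rules did not take. The sketch below computes the expected share per pool under two assumptions that are not confirmed by the article: RAND() is uniform on [0,1), and the first matching rule wins. It ignores the 'ssd' rule and the LIMIT(99) clauses, and `placement_shares` is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: expected share of new files per pool for cascaded RAND rules.
# Shares are printed in hundredths of a percent to stay in integers.
placement_shares() {
    t1=$1; t2=$2; t3=$3           # rule thresholds in percent
    p1=$(( t1 * 100 ))
    p2=$(( (100 - t1) * t2 ))
    p3=$(( (100 - t1) * (100 - t2) * t3 / 100 ))
    rest=$(( 10000 - p1 - p2 - p3 ))
    echo "pool1=$p1 pool2=$p2 pool3=$p3 system=$rest"
}

placement_shares 24 34 50
# prints: pool1=2400 pool2=2584 pool3=2508 system=2508
```

Under these assumptions the shares are 24.00%, 25.84%, 25.08% and 25.08%, which is close to the roughly equal counts (about 1500 of 6000 files per pool) seen in the test runs.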

Testing policies

mmchpolicy gpfsbig /root/policy -I test

Installing policies

mmchpolicy gpfsbig /root/policy -I yes

Checking policies

mmlspolicy gpfsbig -L

3. NFSv4 ACLs

The best way to set up NFSv4 ACLs is from a Windows machine.

First, create the directory or file with permissions 777 and owner root:root, then change the permissions from Windows.

    mmgetacl /gpfsbig/Departments/planning/
    #NFSv4 ACL
    #owner:root
    #group:root
    #ACL flags:
    #  DACL_PRESENT
    #  DACL_AUTO_INHERITED
    special:owner@:rwxc:allow
     (X)READ/LIST (X)WRITE/CREATE (X)MKDIR (X)SYNCHRONIZE (X)READ_ACL (X)READ_ATTR (X)READ_NAMED
     (-)DELETE (X)DELETE_CHILD (X)CHOWN (X)EXEC/SEARCH (X)WRITE_ACL (X)WRITE_ATTR (X)WRITE_NAMED

    special:group@:r-x-:allow
     (X)READ/LIST (-)WRITE/CREATE (-)MKDIR (X)SYNCHRONIZE (X)READ_ACL (X)READ_ATTR (X)READ_NAMED
     (-)DELETE (-)DELETE_CHILD (-)CHOWN (X)EXEC/SEARCH (-)WRITE_ACL (-)WRITE_ATTR (-)WRITE_NAMED

    group:SCANEX\dg_itengineer:rwxc:allow:FileInherit:DirInherit
     (X)READ/LIST (X)WRITE/CREATE (X)MKDIR (X)SYNCHRONIZE (X)READ_ACL (X)READ_ATTR (X)READ_NAMED
     (X)DELETE (X)DELETE_CHILD (X)CHOWN (X)EXEC/SEARCH (X)WRITE_ACL (X)WRITE_ATTR (X)WRITE_NAMED

4. Snapshots

The script below creates a snapshot named after the current date and deletes the snapshot taken 30 days earlier. It is started every day by cron on gpfs-node1.

    cat /scripts/gpfs_snap_cr
    #!/bin/sh
    # today's date, e.g. 20130704
    num=`/bin/date "+%Y%m%d"`
    # current time in seconds since the epoch
    nums=`/bin/date "+%s"`
    # 2592000 s = 30 days
    numms=`expr $nums - 2592000`
    numm=`/bin/date --date=@$numms "+%Y%m%d"`
    /usr/lpp/mmfs/bin/mmcrsnapshot gpfsbig gpfsbig$num
    /usr/lpp/mmfs/bin/mmdelsnapshot gpfsbig gpfsbig$numm
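The date arithmetic in the script can be checked in isolation. The sketch below builds both snapshot names from a fixed timestamp; `snapshot_names` is a hypothetical helper, it needs GNU date (for `--date=@SECONDS`), and unlike the script above it uses UTC so that the result does not depend on the local time zone.

```shell
#!/bin/sh
# Sketch: print the name of the snapshot to create and the name of the
# snapshot to delete (30 days older) for a given epoch timestamp.
snapshot_names() {
    now=$1                                 # seconds since the epoch
    old=$(( now - 30 * 24 * 3600 ))        # 2592000 s = 30 days
    echo "gpfsbig$(date -u --date=@"$now" +%Y%m%d)" \
         "gpfsbig$(date -u --date=@"$old" +%Y%m%d)"
}

snapshot_names 1372939200   # 2013-07-04 12:00 UTC
# prints: gpfsbig20130704 gpfsbig20130604
```

Note that only the snapshot whose name matches the date exactly 30 days back is removed, so a missed cron run leaves that day's old snapshot in place.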

Mounting

mmmount gpfsbig

On Linux nodes the file system is mounted at /gpfsbig.

On Windows nodes, mount it manually:

    mmmount gpfsbig Y

Testing

The script below was used for the HDD write I/O tests.

    #!/bin/bash
    # k = number of parallel dd streams
    k=1
    # choose the file size in 1 MB blocks; later checks override earlier ones
    if [ $k -le 2 ]; then
        count=30000
    fi
    if [ $k -le 6 ] && [ $k -gt 1 ]; then
        count=10000
    fi
    if [ $k -gt 5 ]; then
        count=3000
    fi
    for ((i=1; i<=k; i++)); do
        dd if=/dev/zero of=/gpfsbig/tests/test$k$i.iso bs=1M count=$count &>/gpfsbig/tests/$k$i &
    done

The script below was used for the HDD read I/O tests.

    #!/bin/bash
    # k = number of parallel dd streams
    k=1
    # choose the file size in 1 MB blocks; later checks override earlier ones
    if [ $k -le 2 ]; then
        count=30000
    fi
    if [ $k -le 6 ] && [ $k -gt 1 ]; then
        count=10000
    fi
    if [ $k -gt 5 ]; then
        count=3000
    fi
    for ((i=1; i<=k; i++)); do
        dd if=/gpfsbig/tests/test$k$i.iso of=/dev/null bs=1M count=$count &>/gpfsbig/tests/rd$k$i &
    done

The following k values were used: 1, 2, 4, 8, 16, 32, 64.

Average speed per file, HDD pool:

| k | Write | Read |
|---|---|---|
| 1 | 190 MB/s | 290 MB/s |
| 2 | 176 MB/s | 227 MB/s |
| 4 | 130 MB/s | 186 MB/s |
| 8 | 55.3 MB/s | 76 MB/s |
| 16 | 20.5 MB/s | 32 MB/s |
| 32 | 15.7 MB/s | 20.7 MB/s |
| 64 | 8.2 MB/s | 11.9 MB/s |

The same scripts were used for SSD pool testing.

Average speed per file, SSD pool:

| k | Write | Read |
|---|---|---|
| 1 | 1.7 GB/s | 3.6 GB/s |
| 2 | 930 MB/s | 1.8 GB/s |
| 4 | 449 MB/s | 889 MB/s |
| 8 | 235 MB/s | 446 MB/s |
| 16 | 112 MB/s | 222 MB/s |
| 32 | 50 MB/s | 110 MB/s |
| 64 | 21 MB/s | 55 MB/s |
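The per-file figures above can be turned into an approximate aggregate throughput by multiplying by k. This is a rough sketch that assumes all k streams run concurrently for the whole test; `aggregate_mbps` is a hypothetical helper, and awk is used only for the decimal arithmetic.

```shell
#!/bin/sh
# Sketch: aggregate throughput in MB/s = number of parallel streams (k)
# times the average per-file speed in MB/s.
aggregate_mbps() {
    awk -v k="$1" -v speed="$2" 'BEGIN { printf "%.1f\n", k * speed }'
}

aggregate_mbps 1 190     # HDD write, k=1  -> 190.0
aggregate_mbps 8 55.3    # HDD write, k=8  -> 442.4
aggregate_mbps 64 8.2    # HDD write, k=64 -> 524.8
```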

In fact, a single large HDD pool was faster at small k values, but the probability of losing data is higher.

A few words about SMB shares.

There are two ways to share the storage over SMB:

- Windows

- Linux + Samba

Windows is the preferred way to share the storage when GPFS NFSv4 ACLs are used. However, GPFS on Windows requires SMB v1 only. See link

Linux SMB is faster. But if you intend to use GPFS NFSv4 ACLs, you may face many problems with ACLs (in our experience).

Conclusion.

GPFS is a very productive file system, and it justified all the effort spent. To optimize the GPFS license cost, the E5-2643 processor should be used.

Use the Intel® Xeon® Processor E5-2609 if you are not going to use the storage nodes as clients (NFS, Samba, etc.).

Enjoy.
