Spectrum Scale (GPFS) with HA SMB

Introduction.

This article walks through building fault-tolerant file storage with a highly available SMB service on Spectrum Scale (GPFS) v4.2. The file system was deployed on three CentOS 7 Linux servers with local disks. Each server is connected through two 10 Gbit/s Ethernet ports. All packages were installed manually, as with GPFS 3.5. Manual installation was chosen because we use Spacewalk and Puppet for deployment.

Scheme and Equipment.

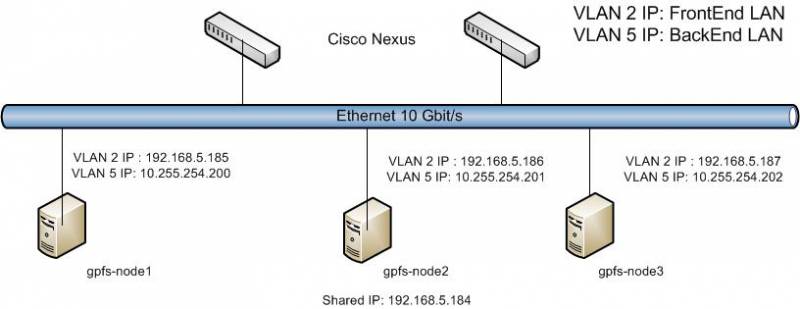

Scheme

Each gpfs-nodeX is connected to each of the Ethernet/Linux switches. LACP bonding is used for the connections to the Ethernet switches. The switches are configured with vPC.

A separate back-end LAN was configured so that the cluster is not disrupted if another device takes over the IP address of one of the front-end servers.

Equipment

| Type | Model | Quantity |
| --- | --- | --- |
| Chassis | Supermicro SC846TQ-R900B | 1 |
| CPU | Intel® Xeon® Processor E5620 | 2 |
| MEM | 64 GB* / 32 GB | 1 |
| Controller | Adaptec | 1 |

*only for gpfs-node1 and gpfs-node2

Preparation

A brief overview of the initial CentOS 7 configuration on the gpfs-nodeX nodes.

1. (gpfs-nodeX) Two disks were used for the system partition.

One RAID 1 volume and 22 single-disk volumes were created on the RAID controller.

The read/write cache must be turned off for every volume that will be used by GPFS. With the cache turned on, we observed NSDs being lost, together with their data, after a node reboot.

Install the following packages on every node:

yum install bison byacc cscope kernel-devel boost-devel boost-regex \
  ksh rpm-sign ctags cvs diffstat doxygen flex gcc gcc-c++ gcc-gfortran \
  gettext git indent intltool libtool zlib-devel libuuid-devel bc tcl tk \
  tcsh mc libaio ntp ntpdate openssh-clients wget tar net-tools \
  patch patchutils rcs rpm-build subversion swig systemtap dkms -y

2. Put all Ethernet ports into a bond (mode 4, LACP).

3. Passwordless access between nodes for user root.

The key should be created on one node and then copied to /root/.ssh/ on the other nodes.

ssh-keygen -t dsa    # create a key without a passphrase
cd /root/.ssh
cat id_dsa.pub >> authorized_keys
chown root.root authorized_keys
chmod 600 authorized_keys
echo "StrictHostKeyChecking no" > config

4. Don't forget to add all host names to /etc/hosts.

10.255.254.200 gpfs-node1
10.255.254.201 gpfs-node2
10.255.254.202 gpfs-node3
192.168.5.185 gpfs-node1.example.com
192.168.5.186 gpfs-node2.example.com
192.168.5.187 gpfs-node3.example.com
192.168.5.184 gpfs-share
192.168.5.184 gpfs-share.example.com
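Before moving on, it can help to confirm that every expected name really is in the hosts file on each node. The sketch below is only an illustration: `check_hosts` is a hypothetical helper, not part of GPFS, and it only inspects the file contents (it does not query DNS).

```shell
# check_hosts FILE NAME... : report every NAME that has no entry in FILE.
# Hypothetical helper; lines starting with '#' are ignored.
check_hosts() {
    file=$1
    shift
    missing=0
    for name in "$@"; do
        # A name counts as present if it appears in any column after the IP address.
        if ! awk -v n="$name" '
            /^[[:space:]]*#/ { next }
            { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit !found }' "$file"; then
            echo "missing: $name"
            missing=1
        fi
    done
    return $missing
}

# Example (run on each node):
# check_hosts /etc/hosts gpfs-node1 gpfs-node2 gpfs-node3 gpfs-share
```

The function returns a non-zero status when at least one name is missing, so it can be used as a guard in a provisioning script.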

5. Configure ntpd on all nodes

6. Firewall

Allow the ports used by sshd and Spectrum Scale (GPFS), as listed in the official IBM documentation.

7. Configuration of sysctl.conf

net.ipv4.tcp_timestamps=0
net.ipv4.tcp_sack=0
net.core.netdev_max_backlog=250000
net.core.rmem_max=16777216
net.core.wmem_max=16777216
net.core.rmem_default=16777216
net.core.wmem_default=16777216
net.core.optmem_max=16777216
net.ipv4.tcp_mem=16777216 16777216 16777216
net.ipv4.tcp_rmem=4096 87380 16777216
net.ipv4.tcp_wmem=4096 65536 16777216

8. Add max open files to /etc/security/limits.conf (depends on your requirements)

* hard nofile 1000000
* soft nofile 1000000

9. Disable SELinux

Spectrum Scale GPFS 4.2 installation

This requires the Standard or Advanced edition package that includes protocol support.

All of the following steps must be completed on every node.

1. Installation

Extract the software and start the installation.

./Spectrum_Scale_Protocols_Advanced-4.2.0.0-x86_64-Linux-install --text-only

cd /usr/lpp/mmfs/4.2.0.0/gpfs_rpms/
rpm -ivh gpfs.base*.rpm gpfs.gpl*rpm gpfs.gskit*rpm gpfs.msg*rpm gpfs.ext*rpm gpfs.adv*rpm

2. Installing updates (the 4.2.0 release has a bug)

./Spectrum_Scale_Protocols_Advanced-4.2.0.2-x86_64-Linux-install --text-only
cd /usr/lpp/mmfs/4.2.0.2/gpfs_rpms/
rpm -Uvh gpfs.base*.rpm gpfs.gpl*rpm gpfs.gskit*rpm gpfs.msg*rpm gpfs.ext*rpm gpfs.adv*rpm

3. Kernel module compilation

cd /usr/lpp/mmfs/src && make LINUX_DISTRIBUTION=REDHAT_AS_LINUX Autoconfig && make World && make rpm
rpm -ivh ~/rpmbuild/RPMS/x86_64/gpfs.gplbin*

4. Set up paths

echo 'export PATH=$PATH:/usr/lpp/mmfs/bin' > /etc/profile.d/gpfs.sh
export PATH=$PATH:/usr/lpp/mmfs/bin

GPFS was successfully installed.

Cluster configuration

Run the configuration on one of the nodes.

Quorum is used to keep the cluster reliable.
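With node quorum, GPFS keeps running as long as a majority of the quorum nodes (half of them plus one) is available. A minimal sketch of that arithmetic for the three-node cluster described here; the function names are illustrative and are not GPFS commands.

```shell
# quorum_needed N : smallest majority among N quorum nodes.
quorum_needed() {
    echo $(( $1 / 2 + 1 ))
}

# failures_tolerated N : how many quorum nodes may fail before quorum is lost.
failures_tolerated() {
    echo $(( $1 - ($1 / 2 + 1) ))
}

quorum_needed 3        # three quorum nodes, as in this cluster
failures_tolerated 3
```

For three quorum nodes this gives a majority of two, so the cluster survives the loss of one node. With only two quorum nodes no failure would be tolerated, which is one reason to make all three servers quorum nodes.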

1. Create a file describing the nodes.

cat /root/gpfs_create
gpfs-node1:quorum-manager
gpfs-node2:quorum-manager
gpfs-node3:quorum-manager

2. Create the cluster.

mmcrcluster -N /root/gpfs_create -p gpfs-node1 -s gpfs-node2 -r /usr/bin/ssh -R /usr/bin/scp -C gpfsstorage0

Check:

mmlscluster

Apply the licenses:

mmchlicense server --accept -N gpfs-node1,gpfs-node2,gpfs-node3

Check the licenses:

mmlslicense -L

3. Creating NSD.

Five pools were created in the cluster: system and four additional pools, for reliability (eight is the maximum number of pools per file system). The system pool stores both data and metadata.

Create the NSD description file.

Format: block dev:node name::type of data:failure group number:NSD name:pool name

block dev - sd*

type of data - metadataOnly, dataOnly, dataAndMetadata

Each node is its own failure group.

cat /root/nsd_creation
sdb:gpfs-node1::dataAndMetadata:7101:node1NSD3THDD1::
sdc:gpfs-node1::dataAndMetadata:7101:node1NSD3THDD2::
sdd:gpfs-node1::dataAndMetadata:7101:node1NSD3THDD3::
sde:gpfs-node1::dataAndMetadata:7101:node1NSD3THDD4::
sdf:gpfs-node1::dataAndMetadata:7101:node1NSD3THDD5::
sdg:gpfs-node1::dataAndMetadata:7101:node1NSD3THDD6::
sdh:gpfs-node1::dataOnly:7101:node1NSD3THDD7:pool1
sdi:gpfs-node1::dataOnly:7101:node1NSD3THDD8:pool1
sdj:gpfs-node1::dataOnly:7101:node1NSD3THDD9:pool1
sdk:gpfs-node1::dataOnly:7101:node1NSD3THDD10:pool1
sdl:gpfs-node1::dataOnly:7101:node1NSD3THDD11:pool2
sdm:gpfs-node1::dataOnly:7101:node1NSD3THDD12:pool2
sdn:gpfs-node1::dataOnly:7101:node1NSD3THDD13:pool2
sdo:gpfs-node1::dataOnly:7101:node1NSD3THDD14:pool2
sdp:gpfs-node1::dataOnly:7101:node1NSD3THDD15:pool3
sdq:gpfs-node1::dataOnly:7101:node1NSD3THDD16:pool3
sdr:gpfs-node1::dataOnly:7101:node1NSD3THDD17:pool3
sds:gpfs-node1::dataOnly:7101:node1NSD3THDD18:pool3
sdt:gpfs-node1::dataOnly:7101:node1NSD3THDD19:pool4
sdu:gpfs-node1::dataOnly:7101:node1NSD3THDD20:pool4
sdv:gpfs-node1::dataOnly:7101:node1NSD3THDD21:pool4
sdw:gpfs-node1::dataOnly:7101:node1NSD3THDD22:pool4
sdb:gpfs-node2::dataAndMetadata:7201:node2NSD3THDD1::
sdc:gpfs-node2::dataAndMetadata:7201:node2NSD3THDD2::
sdd:gpfs-node2::dataAndMetadata:7201:node2NSD3THDD3::
sde:gpfs-node2::dataAndMetadata:7201:node2NSD3THDD4::
sdf:gpfs-node2::dataAndMetadata:7201:node2NSD3THDD5::
sdg:gpfs-node2::dataAndMetadata:7201:node2NSD3THDD6::
sdh:gpfs-node2::dataOnly:7201:node2NSD3THDD7:pool1
sdi:gpfs-node2::dataOnly:7201:node2NSD3THDD8:pool1
sdj:gpfs-node2::dataOnly:7201:node2NSD3THDD9:pool1
sdk:gpfs-node2::dataOnly:7201:node2NSD3THDD10:pool1
sdl:gpfs-node2::dataOnly:7201:node2NSD3THDD11:pool2
sdm:gpfs-node2::dataOnly:7201:node2NSD3THDD12:pool2
sdn:gpfs-node2::dataOnly:7201:node2NSD3THDD13:pool2
sdo:gpfs-node2::dataOnly:7201:node2NSD3THDD14:pool2
sdp:gpfs-node2::dataOnly:7201:node2NSD3THDD15:pool3
sdq:gpfs-node2::dataOnly:7201:node2NSD3THDD16:pool3
sdr:gpfs-node2::dataOnly:7201:node2NSD3THDD17:pool3
sds:gpfs-node2::dataOnly:7201:node2NSD3THDD18:pool3
sdt:gpfs-node2::dataOnly:7201:node2NSD3THDD19:pool4
sdu:gpfs-node2::dataOnly:7201:node2NSD3THDD20:pool4
sdv:gpfs-node2::dataOnly:7201:node2NSD3THDD21:pool4
sdw:gpfs-node2::dataOnly:7201:node2NSD3THDD22:pool4
sdb:gpfs-node3::dataAndMetadata:7301:node3NSD3THDD1::
sdc:gpfs-node3::dataAndMetadata:7301:node3NSD3THDD2::
sdd:gpfs-node3::dataAndMetadata:7301:node3NSD3THDD3::
sde:gpfs-node3::dataAndMetadata:7301:node3NSD3THDD4::
sdf:gpfs-node3::dataAndMetadata:7301:node3NSD3THDD5::
sdg:gpfs-node3::dataAndMetadata:7301:node3NSD3THDD6::
sdh:gpfs-node3::dataOnly:7301:node3NSD3THDD7:pool1
sdi:gpfs-node3::dataOnly:7301:node3NSD3THDD8:pool1
sdj:gpfs-node3::dataOnly:7301:node3NSD3THDD9:pool1
sdk:gpfs-node3::dataOnly:7301:node3NSD3THDD10:pool1
sdl:gpfs-node3::dataOnly:7301:node3NSD3THDD11:pool2
sdm:gpfs-node3::dataOnly:7301:node3NSD3THDD12:pool2
sdn:gpfs-node3::dataOnly:7301:node3NSD3THDD13:pool2
sdo:gpfs-node3::dataOnly:7301:node3NSD3THDD14:pool2
sdp:gpfs-node3::dataOnly:7301:node3NSD3THDD15:pool3
sdq:gpfs-node3::dataOnly:7301:node3NSD3THDD16:pool3
sdr:gpfs-node3::dataOnly:7301:node3NSD3THDD17:pool3
sds:gpfs-node3::dataOnly:7301:node3NSD3THDD18:pool3
sdt:gpfs-node3::dataOnly:7301:node3NSD3THDD19:pool4
sdu:gpfs-node3::dataOnly:7301:node3NSD3THDD20:pool4
sdv:gpfs-node3::dataOnly:7301:node3NSD3THDD21:pool4
sdw:gpfs-node3::dataOnly:7301:node3NSD3THDD22:pool4

The system pool is bigger than the other pools because it is also used for metadata. If the system pool is exhausted, the file system stops accepting writes.
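The descriptor file above follows a regular pattern (22 disks per node, the first six in the system pool, then four disks for each of pool1 to pool4), so it can be generated rather than typed by hand. The function below is a sketch under that assumption; `gen_nsd_file` is a hypothetical name, and the disk letters, failure group numbers and NSD names are simply copied from the file shown in this article.

```shell
# gen_nsd_file : print the NSD descriptor lines for three nodes with 22 disks each.
gen_nsd_file() {
    for node in 1 2 3; do
        idx=0
        for letter in b c d e f g h i j k l m n o p q r s t u v w; do
            idx=$((idx + 1))
            if [ "$idx" -le 6 ]; then
                # First six disks: data and metadata, pool field left empty
                # (the article's file ends these lines with "::").
                usage=dataAndMetadata
                pool=":"
            else
                # Remaining disks: data only, four disks per pool (pool1..pool4).
                usage=dataOnly
                pool="pool$(( (idx - 7) / 4 + 1 ))"
            fi
            echo "sd${letter}:gpfs-node${node}::${usage}:7${node}01:node${node}NSD3THDD${idx}:${pool}"
        done
    done
}

# Example:
# gen_nsd_file > /root/nsd_creation
```

Generating the file also makes it easier to review: a mistyped failure group or pool name in one of 66 lines is hard to spot by eye.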

Create NSD:

mmcrnsd -F /root/nsd_creation

4. Configuration and startup.

Start GPFS (from one of the nodes):

mmstartup -a

Check the state:

mmgetstate -a

 Node number  Node name   GPFS state
------------------------------------------
      1       gpfs-node1  active
      2       gpfs-node2  active
      3       gpfs-node3  active
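In a script it is useful to wait for, or at least verify, the "active" state before creating the file system. The sketch below parses output in the layout shown above (node number, node name, state); that column layout is an assumption taken from this article, and `all_nodes_active` is a hypothetical helper name.

```shell
# all_nodes_active : read `mmgetstate -a` output on stdin and succeed only if
# at least one node is listed and every listed node is in state "active".
all_nodes_active() {
    awk '
        # Node lines start with a numeric node number; header lines do not.
        $1 ~ /^[0-9]+$/ { nodes++; if ($3 != "active") bad++ }
        END { if (nodes > 0 && bad == 0) exit 0; exit 1 }'
}

# Example:
# mmgetstate -a | all_nodes_active && echo "all nodes are active"
```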

File system creation, file placement policies, NFSv4 ACLs and snapshots

Run the configuration on one of the nodes.

1. Creating the file system

mmcrfs -T /gpfsst /dev/gpfsst0 -F /root/nsd_creation -D nfs4 -k nfs4 -A yes -B 128K -Q no -v yes -r 3 -R 3 -m 3 -M 3
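The options -r 3 and -m 3 set the default number of data and metadata replicas to three (with -R and -M as the corresponding maximums), so with three failure groups every node ends up holding a full copy. A rough sketch of what that means for capacity follows. It ignores metadata and file system overhead, `usable_tb` is a hypothetical helper, and the 3 TB disk size is only an assumption read from the NSD names (NSD3THDD).

```shell
# usable_tb NODES DISKS_PER_NODE DISK_TB REPLICAS :
# raw capacity in TB divided by the number of replicas.
usable_tb() {
    echo $(( $1 * $2 * $3 / $4 ))
}

# 3 nodes, 22 disks per node, 3 TB per disk (assumed), 3 replicas
usable_tb 3 22 3 3
```

Under these assumptions the usable space is about one node's worth of disks, which is the price paid for surviving the loss of any two copies.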

2. Placement policies

The RAND function was used to balance data between the system pool and pools 1-4. Unlike the C function random(), RAND does not give a linear distribution.

The thresholds were obtained with the test described below.

The placement distribution was tested by writing 1000 files. All values were found over several iterations.

Attention: the RAND policy does not work with Windows servers/clients. All of their data will be stored in the default pool.

The policy is listed below.

mmlspolicy gpfsst -L
RULE 'LIST_1' LIST 'allfiles' FROM POOL 'system'
RULE 'LIST_2' LIST 'allfiles' FROM POOL 'pool1'
RULE 'LIST_3' LIST 'allfiles' FROM POOL 'pool2'
RULE 'LIST_4' LIST 'allfiles' FROM POOL 'pool3'
RULE 'LIST_5' LIST 'allfiles' FROM POOL 'pool4'
RULE 'to_pool1' SET POOL 'pool1' LIMIT(99) WHERE INTEGER(RAND()*100)<21
RULE 'to_pool2' SET POOL 'pool2' LIMIT(99) WHERE INTEGER(RAND()*100)<26
RULE 'to_pool3' SET POOL 'pool3' LIMIT(99) WHERE INTEGER(RAND()*100)<34
RULE 'to_pool4' SET POOL 'pool4' LIMIT(99) WHERE INTEGER(RAND()*100)<50
RULE DEFAULT SET POOL 'system'
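One way to read the thresholds 21, 26, 34 and 50: placement rules are tried in order and the first matching rule wins, so a file reaches the pool2 rule only if the pool1 rule did not match, and so on. The sketch below computes the expected share per pool under the assumption that every RAND() call is an independent, uniform draw. That is a simplifying model of ours, not something the article measured; the article's values were found by experiment.

```shell
# pool_shares : expected fraction of new files per pool for the thresholds above,
# assuming sequential rule evaluation and independent uniform RAND() draws.
pool_shares() {
    awk 'BEGIN {
        n = split("pool1:21 pool2:26 pool3:34 pool4:50", rules, " ")
        remaining = 1.0
        for (i = 1; i <= n; i++) {
            split(rules[i], r, ":")
            p = r[2] / 100                 # INTEGER(RAND()*100) < t matches t values out of 100
            printf "%s %.4f\n", r[1], remaining * p
            remaining *= (1 - p)           # files that fall through to the next rule
        }
        printf "system %.4f\n", remaining  # RULE DEFAULT catches the rest
    }'
}

pool_shares
```

Under this model each of the five pools receives roughly a fifth of the files (about 21%, 20.5%, 19.9%, 19.3% and 19.3%), which is consistent with the goal of balancing data across the pools.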

Testing policies

mmchpolicy gpfsst /root/policy -I test

Installing policies

mmchpolicy gpfsst /root/policy -I yes

Checking policies

mmlspolicy gpfsst -L

3. ACL NFSv4

Use mmeditacl to edit an ACL and mmgetacl to view it:

mmgetacl /gpfsst/eng/
#NFSv4 ACL
#owner:root
#group:root
#ACL flags:
#  DACL_PRESENT
#  DACL_AUTO_INHERITED
special:owner@:rwxc:allow
 (X)READ/LIST (X)WRITE/CREATE (X)MKDIR (X)SYNCHRONIZE (X)READ_ACL  (X)READ_ATTR  (X)READ_NAMED
 (-)DELETE    (X)DELETE_CHILD (X)CHOWN (X)EXEC/SEARCH (X)WRITE_ACL (X)WRITE_ATTR (X)WRITE_NAMED

special:group@:r-x-:allow
 (X)READ/LIST (-)WRITE/CREATE (-)MKDIR (X)SYNCHRONIZE (X)READ_ACL  (X)READ_ATTR  (X)READ_NAMED
 (-)DELETE    (-)DELETE_CHILD (-)CHOWN (X)EXEC/SEARCH (-)WRITE_ACL (-)WRITE_ATTR (-)WRITE_NAMED

group:EXAMPLE\dg_engineer:rwxc:allow:FileInherit:DirInherit
 (X)READ/LIST (X)WRITE/CREATE (X)MKDIR (X)SYNCHRONIZE (X)READ_ACL  (X)READ_ATTR  (X)READ_NAMED
 (X)DELETE    (X)DELETE_CHILD (X)CHOWN (X)EXEC/SEARCH (X)WRITE_ACL (X)WRITE_ATTR (X)WRITE_NAMED

4. Snapshots

The script below creates a snapshot and deletes the snapshot taken 30 days earlier. It is started every day by cron on gpfs-node1.

cat /scripts/gpfs_snap_cr
#!/bin/sh
# Today's date, used as the suffix of the new snapshot
num=`/bin/date "+%Y%m%d"`
# Current time in seconds since the epoch
nums=`/bin/date "+%s"`
# Date 30 days ago (2592000 seconds), the suffix of the snapshot to delete
numms=`expr $nums - 2592000`
numm=`/bin/date --date=@$numms "+%Y%m%d"`
/usr/lpp/mmfs/bin/mmcrsnapshot gpfsst gpfsst$num
/usr/lpp/mmfs/bin/mmdelsnapshot gpfsst gpfsst$numm
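The script removes only the snapshot whose name carries the date exactly 30 days back, so a missed cron run leaves that snapshot behind forever. A possible variation is to select every snapshot older than the cutoff by its name. The filter below is a sketch: `expired_snapshots` is a hypothetical helper, and it relies on the gpfsstYYYYMMDD naming used above.

```shell
# expired_snapshots CUTOFF : read snapshot names on stdin (one per line) and
# print those named gpfsstYYYYMMDD whose date is earlier than CUTOFF (YYYYMMDD).
expired_snapshots() {
    cutoff=$1
    while read -r name; do
        stamp=${name#gpfsst}
        case $stamp in
            [0-9][0-9][0-9][0-9][0-9][0-9][0-9][0-9])
                if [ "$stamp" -lt "$cutoff" ]; then
                    echo "$name"
                fi
                ;;
        esac
    done
    return 0
}

# Example (assumes GNU date, and that the first column of the mmlssnapshot
# output is the snapshot name):
# cutoff=$(date -d '30 days ago' +%Y%m%d)
# mmlssnapshot gpfsst | awk '{print $1}' | expired_snapshots "$cutoff" |
#     while read -r snap; do /usr/lpp/mmfs/bin/mmdelsnapshot gpfsst "$snap"; done
```

Lines that do not look like a dated snapshot name (headers, other snapshots) are ignored, so the filter never proposes anything it did not create a name pattern for.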

Installing the HA SMB service.

On two nodes, gpfs-node1 and gpfs-node2:

yum install nfs-utils
rpm -ivh /usr/lpp/mmfs/4.2.0.0/ganesha_rpms/*.rpm
rpm -ivh /usr/lpp/mmfs/4.2.0.0/smb_rpms/*.rpm

Update:

rpm -Uvh /usr/lpp/mmfs/4.2.0.2/ganesha_rpms/*.rpm
rpm -Uvh /usr/lpp/mmfs/4.2.0.2/smb_rpms/*.rpm

Configuring the HA SMB service.

Run the configuration on one of the nodes.

1. Create a directory on the GPFS storage

mkdir -p /gpfsst/ces

2. Setting up Cluster Export Services shared root file system

mmchconfig cesSharedRoot=/gpfsst/ces

3. Configuring Cluster Export Services nodes

mmchnode --ces-enable -N gpfs-node1,gpfs-node2

After configuring all nodes, verify that the list of CES nodes is complete:

mmces node list

4. Preparing to perform service actions on the CES shared root directory file system

Create a node class named protocol that contains the protocol nodes:

mmcrnodeclass protocol -N gpfs-node1,gpfs-node2

Suspend all protocol nodes:

mmces node suspend -N gpfs-node1,gpfs-node2

Stop Protocol services on all protocol nodes:

mmces service stop NFS -a
mmces service stop SMB -a
mmces service stop OBJ -a

Verify that all protocol services have been stopped:

mmces service list -a

Shut down GPFS on all protocol nodes:

mmshutdown -N protocol

Protocol nodes are now ready for service actions to be performed on CES shared root directory or the nodes themselves. To recover from a service action start up GPFS on all protocol nodes:

mmstartup -N protocol

Make sure that the CES shared root directory file system is mounted on all protocol nodes:

mmmount cesSharedRoot -N protocol

Resume all protocol nodes:

mmces node resume -N gpfs-node1,gpfs-node2

Start protocol services on all protocol nodes:

mmces service start SMB -a
mmces service enable SMB

Verify that all protocol services have been started:

mmces service list -a

5. Creating shared IP

mmces address add --ces-node gpfs-node1 --ces-ip 192.168.5.184

Verify:

mmces address list

6. Joining Active Directory

Create the DNS records beforehand.

mmuserauth service create --type ad --data-access-method file --netbios-name gpfs-share \
  --user-name admin@example.com --idmap-role master --servers 192.168.4.9 --password Password \
  --idmap-range-size 1000000 --idmap-range 10000000-299999999 --unixmap-domains 'EXAMPLE(5000-20000)'
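The two idmap options work together: assuming --idmap-range is the overall pool of UIDs/GIDs and --idmap-range-size is the slice reserved for each domain (our reading of the options, to be checked against the IBM documentation), the number of domains that can be mapped is the pool size divided by the slice size. A small sketch of that calculation, with `idmap_domains` as a hypothetical helper name:

```shell
# idmap_domains RANGE SIZE : how many per-domain ID slices of SIZE fit into
# RANGE, given as "low-high" (both ends inclusive).
idmap_domains() {
    low=${1%-*}
    high=${1#*-}
    echo $(( ($high - $low + 1) / $2 ))
}

# Values from the mmuserauth command above
idmap_domains 10000000-299999999 1000000
```

With the values used here the result is 290 slices of one million IDs each.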

7. Export directory

Creating directory

mkdir -p /gpfsst/accounting

Set NFSv4 ACL

export EDITOR=/usr/bin/vi
mmeditacl /gpfsst/accounting

#NFSv4 ACL
#owner:root
#group:root
#ACL flags:
#  DACL_PRESENT
#  DACL_AUTO_INHERITED
special:owner@:rwxc:allow
 (X)READ/LIST (X)WRITE/CREATE (X)MKDIR (X)SYNCHRONIZE (X)READ_ACL  (X)READ_ATTR  (X)READ_NAMED
 (-)DELETE    (X)DELETE_CHILD (X)CHOWN (X)EXEC/SEARCH (X)WRITE_ACL (X)WRITE_ATTR (X)WRITE_NAMED

group:EXAMPLE\dg_accounting:rwxc:allow:FileInherit:DirInherit
 (X)READ/LIST (X)WRITE/CREATE (X)MKDIR (X)SYNCHRONIZE (X)READ_ACL  (X)READ_ATTR  (X)READ_NAMED
 (X)DELETE    (X)DELETE_CHILD (X)CHOWN (X)EXEC/SEARCH (X)WRITE_ACL (X)WRITE_ATTR (X)WRITE_NAMED

Create the SMB share:

mmsmb export add accounting "/gpfsst/accounting"

Adding options:

mmsmb export change accounting --option "browseable=yes"

Verify:

mmsmb export list

export     path               guest ok smb encrypt
accounting /gpfsst/accounting no       auto
Information:
The following options are not displayed because they do not contain a value:
"browseable"

Installing GUI.

1. Installing the sensors on every node

yum install boost-devel boost-regex nc -y

cd /usr/lpp/mmfs/4.2.0.2/zimon_rpms

rpm -ivh gpfs.gss.pmsensors-4.2.0*.rpm

2. Installing the collectors on one or more nodes

cd /usr/lpp/mmfs/4.2.0.2/zimon_rpms

rpm -ivh gpfs.gss.pmcollector-4.2.0-*.rpm

3. Installing the GUI on one or more nodes

yum install postgresql-server
cd /usr/lpp/mmfs/4.2.0.2/gpfs_rpms
rpm -ivh gpfs.gui-4.2.0-2.el7.*.rpm

4. Configuring and starting the collectors on one or more nodes

mmperfmon config generate --collectors gpfs-node1
systemctl start pmcollector
systemctl enable pmcollector

5. Configuring and starting the sensors from one node (gpfs-node1)

Enable the sensors on the cluster by using the mmchnode command. Issuing this command configures and starts the performance tool sensors on the nodes.

mmchnode --perfmon -N gpfs-node1,gpfs-node2,gpfs-node3

On every node:

systemctl start pmsensors
systemctl enable pmsensors

6. Starting the GUI on one node (gpfs-node1)

systemctl start gpfsgui
systemctl enable gpfsgui

7. Connecting

Open https://gpfs-node1.example.com in a browser.

The default user name and password for the IBM Spectrum Scale management GUI are admin and admin001 respectively.

Conclusion.

Enjoy!
