Ceph vs Portworx as storage for Kubernetes

Introduction

- Portworx: storage for Kubernetes. A 31-day trial is provided automatically.

Portworx supports RWO and RWX volumes, as well as snapshots. Snapshots can be stored locally or in S3. Creating and deleting volumes and snapshots is integrated with Kubernetes.

- Ceph: the most popular storage for Kubernetes. Open source.

Ceph RBD supports RWO volumes and CephFS supports RWX volumes. Snapshots are supported and can be exported as a file. Creating and deleting snapshots, as well as RWX volumes, are not integrated with Kubernetes. These actions must be done natively with the ceph/rbd utilities.
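Since RBD snapshots are handled outside Kubernetes, the native workflow might look roughly like the sketch below. The pool, image, snapshot and file names are hypothetical arguments, not values from this test setup; on a real cluster the image name would have to be looked up from the PV. Setting `RBD="echo rbd"` previews the commands without running them.

```shell
# Sketch: snapshot an RBD image, export it to a file, then drop the snapshot.
# Pool, image and snapshot names are placeholders supplied by the caller.
RBD="${RBD:-rbd}"   # set RBD="echo rbd" for a dry run

rbd_snapshot_export() {
    pool="$1"; image="$2"; snap="$3"; out="$4"
    # Create a point-in-time snapshot of the image.
    $RBD snap create "${pool}/${image}@${snap}" || return 1
    # Export the snapshot contents to a regular file.
    $RBD export "${pool}/${image}@${snap}" "${out}" || return 1
    # Remove the snapshot once the export has been written.
    $RBD snap rm "${pool}/${image}@${snap}"
}
```

For example, `rbd_snapshot_export kube my-image snap1 /backup/my-image.snap1` would run the three `rbd` steps in order and stop at the first failure.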

Resources

- 3 VMs on oVirt virtualization. All VMs are on the same host.

- Each VM: 6 vCPU, 28 GB MEM, 100 GB system disk, 200 GB disk for Portworx, 200 GB disk for Ceph, 10 Gbit/s network.

- OS: CentOS 7, kernel 3.10.0-957.21.3.el7.x86_64.

- Kubernetes deployed with kubespray.

Deployment phase

Portworx was deployed with Helm using the following doc:

Ceph was deployed with Ansible as Docker containers on the Kubernetes hosts:

CSI was not tested. For CephFS it is not ready for production use (https://github.com/ceph/ceph-csi).

Software versions:

- portworx - 2.0.3.6

- ceph - nautilus - 14.2.1

In both cases, additional steps not covered in the docs above were required. For Portworx, the Helm chart had to be edited. For Ceph, ceph-common had to be installed on the VMs, and rbd-provisioner, RBAC rules and a storage class had to be added.
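As an illustration of the storage class step, the sketch below prints a StorageClass manifest for the external rbd-provisioner. It is not the manifest used in these tests: the class name, monitor address, pool, user IDs and secret names are all assumptions and would need to match the actual Ceph cluster and Kubernetes secrets.

```shell
# Sketch: print a StorageClass for the external rbd-provisioner.
# Every value below (name, IDs, secret names) is a placeholder.
rbd_storage_class() {
    monitors="$1"; pool="$2"
    cat <<EOF
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: rbd
provisioner: ceph.com/rbd
parameters:
  monitors: ${monitors}
  pool: ${pool}
  adminId: admin
  adminSecretName: ceph-admin-secret
  adminSecretNamespace: kube-system
  userId: kube
  userSecretName: ceph-user-secret
  imageFormat: "2"
  imageFeatures: layering
EOF
}
```

The output could be reviewed first and then piped to `kubectl apply -f -`, for example `rbd_storage_class 10.0.0.1:6789 kube | kubectl apply -f -` (the address and pool here are made up).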

Usability

Working with CephFS snapshots is not very convenient because it requires working with a mounted file system.
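To show what this means in practice: CephFS exposes snapshots through a hidden `.snap` directory inside each directory of the mounted file system. A minimal sketch, assuming the file system is already mounted and snapshots are enabled on it (the function names and paths are hypothetical):

```shell
# Sketch: CephFS snapshots are created and removed through the hidden
# ".snap" directory of a directory on a mounted CephFS.
cephfs_snap_create() {
    dir="$1"; name="$2"
    # Creating a directory under .snap takes a snapshot of "$dir".
    mkdir "${dir}/.snap/${name}"
}

cephfs_snap_remove() {
    dir="$1"; name="$2"
    # Removing that directory deletes the snapshot.
    rmdir "${dir}/.snap/${name}"
}
```

For example, `cephfs_snap_create /mnt/cephfs/pgdata before-upgrade` would snapshot that directory tree, which is why a node with the file system mounted is needed for every snapshot operation.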

Both systems can be integrated with Prometheus.

Performance

PostgreSQL with the pgbench tool was used to test storage performance. The service, pod and PVC/PV YAMLs that were used are here.

All tests were started from an external server: 24 threads, 24 GB MEM.

Prometheus was installed on a separate VM.

The following pgbench tests were used:

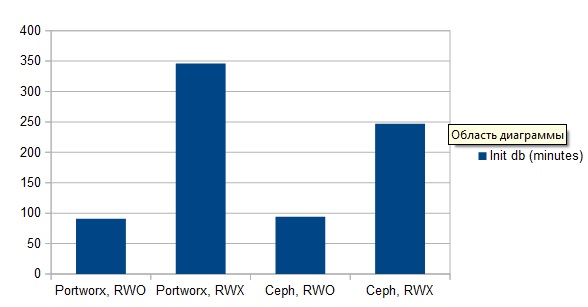

Init db:

time pgbench -h IP -p PORT -U test -i -s 5000 test

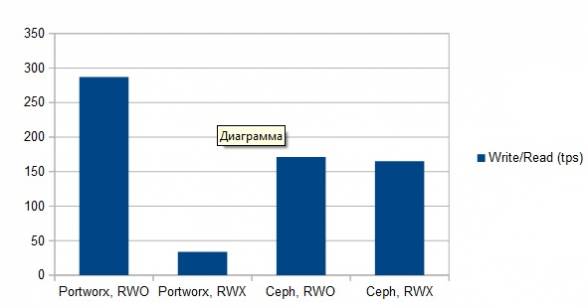

Read/Write:

pgbench -h IP -p PORT -U test -c 10 -j 10 -n -T 600 test

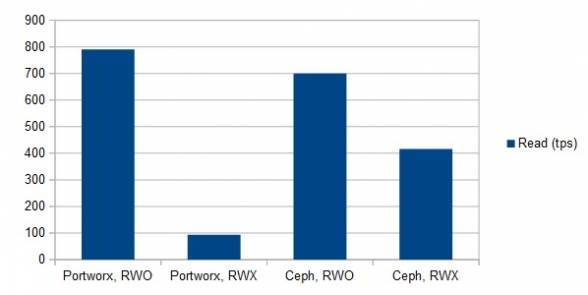

Read:

pgbench -h IP -p PORT -U test -c 10 -j 10 -n -S -T 600 test
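To compare runs across storage backends, it helps to save each pgbench run to a file and pull out the throughput lines afterwards. The helper below is a small sketch, not part of the original test procedure; the function name and log file names are hypothetical. It relies only on pgbench reporting its result on lines that begin with `tps = <number>`.

```shell
# Sketch: extract the tps figures from saved pgbench output.
# Reads the files given as arguments, or stdin if none are given.
pgbench_tps() {
    awk '$1 == "tps" && $2 == "=" { print $3 }' "$@"
}
```

A run could then be captured with `pgbench -h IP -p PORT -U test -c 10 -j 10 -n -T 600 test | tee portworx-rw.log` and summarized with `pgbench_tps portworx-rw.log`.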

Stability

Portworx uses etcd. To avoid running out of space for writes to the keyspace, the etcd keyspace history must be compacted and the space quota must not be exceeded. Both Portworx and Ceph had to be recovered manually after numerous VM power-off and shutdown events. In this respect the two systems are similar.
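The compaction step can be sketched as follows, based on the usual etcd v3 maintenance sequence (compact, defragment, disarm alarms). It assumes `etcdctl` is installed and that etcd is reachable at the endpoint given in `ETCD_ENDPOINT`; the default address and the function names are placeholders, not details of this test setup.

```shell
# Sketch of etcd v3 history compaction; the endpoint is a placeholder.
export ETCDCTL_API=3
ETCD_ENDPOINT="${ETCD_ENDPOINT:-127.0.0.1:2379}"

# Extract the current revision from `etcdctl endpoint status` JSON on stdin.
etcd_revision() {
    grep -Eo '"revision":[0-9]+' | grep -Eo '[0-9]+' | head -n 1
}

etcd_compact_and_defrag() {
    rev="$(etcdctl --endpoints="$ETCD_ENDPOINT" endpoint status --write-out=json | etcd_revision)"
    # Discard key history older than the current revision.
    etcdctl --endpoints="$ETCD_ENDPOINT" compact "$rev"
    # Return the freed space to the file system.
    etcdctl --endpoints="$ETCD_ENDPOINT" defrag
    # Clear a NOSPACE alarm if the quota had already been hit.
    etcdctl --endpoints="$ETCD_ENDPOINT" alarm disarm
}
```

Running `etcd_compact_and_defrag` periodically, or enabling etcd's automatic compaction instead, should keep the database size below the quota.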

Conclusion

Ceph has the following advantages: an open-source license, widespread use, and plenty of information available on the Internet. Working with CephFS snapshots is not convenient.

Portworx has better performance on RWO volumes. However, the performance of Portworx RWX volumes is very poor, so they can't be used for heavily loaded services and applications.

Both systems can be monitored with Prometheus.
