Tasks:

- NFS share storage for VMware/Citrix based on FreeBSD and ZFS

- Fault-tolerant NFS share storage for VMware using GlusterFS

- Lustre file system

- Lustre file system over ZFS

- General Parallel File System (GPFS)

- Spectrum Scale v4.2 (GPFS) with HA SMB

- Deploying ScaleIO 1.3

- FTPS server configuration on CentOS Linux with vsftpd

- Fault-tolerant, multi-protocol proxy service

- Encoding problems when using the Linux mail utility to send reports

- Configuring BGP on Cisco router and Juniper SRX

- Configuring PAT on Juniper SRX with 2 ISP connections for 2 DNS servers in DMZ

- Configuring NAT and fault-tolerant switching between two ISPs on Juniper SRX 220H and Cisco 1941

- VPN GRE over IPsec between FreeBSD, Linux, Cisco, and Check Point

- VPN GRE over IPsec between Juniper SRX and Cisco

- Configuring an IPsec VPN between Juniper SRX and Google Cloud Platform (GCP)

- Using the shell to automate tasks on a Juniper SRX cluster

- Installing HA OpenNebula on CentOS 7 with Ceph as a datastore and an IPoIB network

- VDI: looking ahead to the RBD support features in oVirt 3.6

- Configuring an HA NFS export of home directories stored on Ceph file storage and mounting it on clients with automount within an IPA infrastructure

- Configuring an iSER (iSCSI Extensions for RDMA) server on CentOS 6 Linux with vSphere 6 as the iSER client

- Easy installation of oVirt Engine 4 with Docker

- Fitting a Cloud Foundry installation into the GCP free trial

- Importing a qcow2 image into oVirt/Red Hat Virtualization

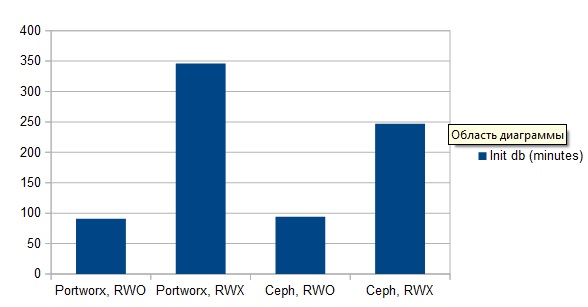

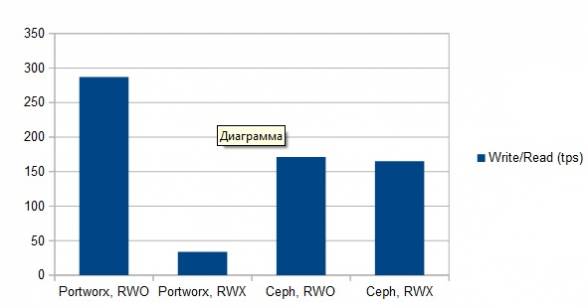

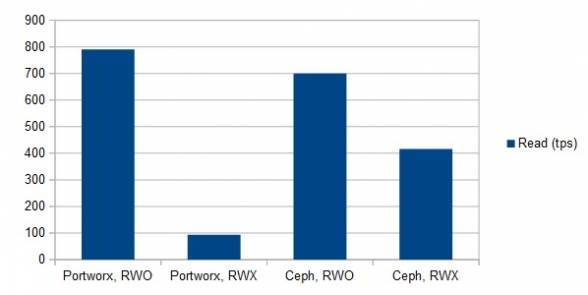

- Ceph vs. Portworx