Task:

* Deploy a file cluster with a capacity of 300 TB

* Allow the capacity to be expanded

* Provide fast file search

* Provide write/read at 1 Gbit/s for 10 clients (hosts)

* Provide access for Linux/Windows hosts

* Average file size - 50 MB

* Budget - $53,000

Solution - Lustre

Introduction.

Lustre is a distributed file system that stores data and metadata separately.

On the hosts themselves, data and metadata are stored on an ext3-based file system.

There is also a ZFS-based Lustre solution, which uses the ZFS file system and its associated capabilities; we do not consider it in this article.

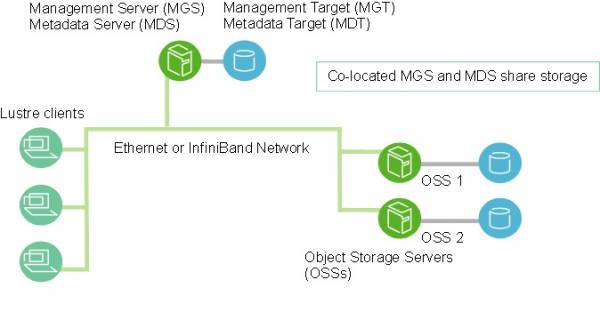

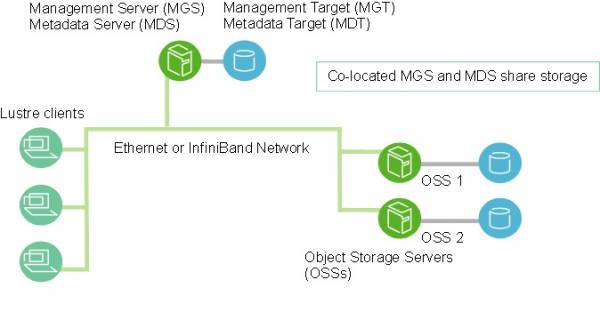

Lustre consists of the following components:

* Management Server (MGS): stores information about all file systems in the cluster (a cluster can have only one MGS) and exchanges this information with all other components.

* Metadata Server (MDS): handles the metadata, which is stored on one or more Metadata Targets (MDT). A file system is limited to one MDS.

* Object Storage Server (OSS): handles the data, which is stored on one or more Object Storage Targets (OST).

* Lustre Networking (LNET): the network module that exchanges information within the cluster. It can run over Ethernet or InfiniBand.

* Lustre clients: nodes with the Lustre client software installed, which provide access to the cluster file systems.

Below is the diagram from the official documentation.

Choosing hardware*.

* Everything below applies to:

MGS/MDS/OSS - CentOS 6.2 x64 + Lustre Whamcloud 2.1.2

Lustre Client - CentOS 5.8 x64 + Lustre Whamcloud 1.8.8

A memory leak problem on clients running CentOS 6.2 + Lustre Whamcloud 2.1.2 forced us to use different versions on the clients and the servers.

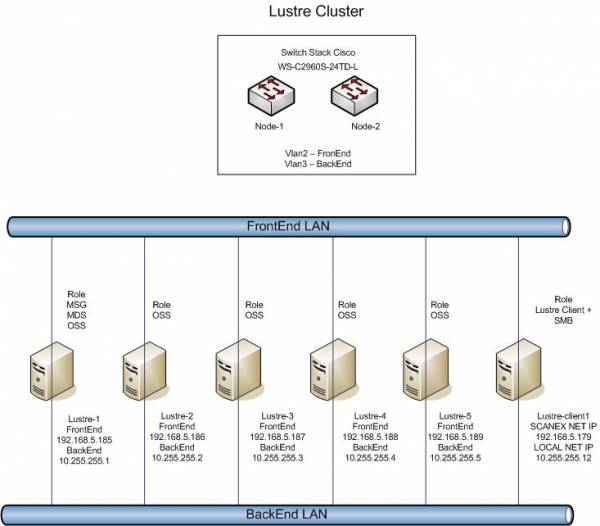

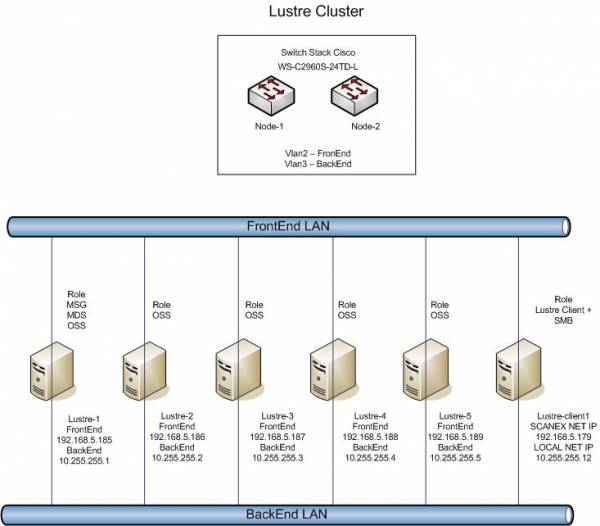

Given the budget restrictions, we decided to use the following scheme:

One node combines the MGS, MDS, and OSS roles.

Four nodes are OSS only.

Memory requirements:

Each MGS, MDS, OSS, and Lustre client node should have more than 4 GB of memory. Caching is enabled on all nodes by default, and that must be taken into account.

The Lustre client cache can fill up to 3/4 of the available memory.

For the MGS/MDS/OSS node - 48 GB

For OSS nodes - 24 GB

For client nodes - 24 GB. This value was chosen to leave room for additional client functions. In practice 8 GB was enough, while a system with 4 GB of memory suffered serious delays.

CPU requirements:

Lustre is efficient enough to run on slow CPUs. For example, a 4-core Xeon 3000 is enough. To minimize possible delays, however, we decided to use the Intel Xeon E5620.

MDT HDD requirement:

Storage volume 300 TB / average file size 50 MB × 2 KB (inode size) ≈ 12,288 MB, or about 12 GB.

To provide high-speed I/O for metadata we decided to use SSDs.
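The MDT estimate above can be reproduced with a few lines of Python. This is only a sketch of the arithmetic: it assumes binary units (1 TB = 1024^4 bytes), one inode per file, and the 2 KB inode size quoted above; the function name is ours.

```python
# Rough MDT sizing: one inode per expected file.
TIB = 1024 ** 4
MIB = 1024 ** 2
KIB = 1024
GIB = 1024 ** 3


def mdt_size_bytes(capacity_bytes, avg_file_bytes, inode_bytes):
    """Return the space needed to hold one inode per expected file."""
    expected_files = capacity_bytes // avg_file_bytes
    return expected_files * inode_bytes


if __name__ == "__main__":
    files = 300 * TIB // (50 * MIB)
    size = mdt_size_bytes(300 * TIB, 50 * MIB, 2 * KIB)
    print(f"expected files: {files:,}")
    print(f"MDT size: {size / GIB:.1f} GiB")
```

With the figures from the task this gives roughly 6.3 million files and about 12 GiB of inode space, so even a small SSD mirror leaves a wide margin.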

Network requirement:

To meet the required characteristics within the budget, we decided to use Ethernet for the back-end network.

Working Scheme and hardware configuration.

Configuration of MGS/MDS/OSS server:

| Type | Model | Quantity |
| --- | --- | --- |
| Chassis | Supermicro SC846TQ-R900B | 1 |
| MotherBoard | Supermicro X8DTN+-F | 1 |
| Memory | KVR1333D3E9SK3/12G | 2 |
| CPU | Intel® Xeon® Processor E5620 | 2 |
| RAID controller | Adaptec RAID 52445 | 1 |
| Cable for RAID | Cable SAS SFF8087 - 4*SATA MS36-4SATA-100 | 6 |
| HDD | Seagate ST3000DM001 | 24 |
| Ethernet card 4-ports | Intel <E1G44HT> Gigabit Adapter Quad Port (OEM) PCI-E 4×10/100/1000Mbps | 1 |
| SSD | 40 Gb SATA-II 300 Intel 320 Series <SSDSA2CT040G3K5> | 2 |
| SSD | 120 Gb SATA 6Gb/s Intel 520 Series <SSDSC2CW120A310/01> 2.5" MLC | 2 |

Configuration of OSS server:

| Type | Model | Quantity |
| --- | --- | --- |
| Chassis | Supermicro SC846TQ-R900B | 1 |
| MotherBoard | Supermicro X8DTN+-F | 1 |
| Memory | KVR1333D3E9SK3/12G | 2 |
| CPU | Intel® Xeon® Processor E5620 | 2 |
| RAID controller | Adaptec RAID 52445 | 1 |
| Cable for RAID | Cable SAS SFF8087 - 4*SATA MS36-4SATA-100 | 6 |
| HDD | Seagate ST3000DM001 | 24 |
| Ethernet card 4-ports | Intel <E1G44HT> Gigabit Adapter Quad Port (OEM) PCI-E 4×10/100/1000Mbps | 1 |
| SSD | 40 Gb SATA-II 300 Intel 320 Series <SSDSA2CT040G3K5> | 2 |

Configuration of Lustre client server:*

* This hardware was already in our stock.

| Type | Model | Quantity |
| --- | --- | --- |
| Server | HP DL160R06 E5520 DL160 G6 E5620 2.40 GHz, 8 GB (590161-421) | 1 |
| CPU | Intel Xeon E5620 (2.40 GHz/4-core/80 W/12 MB) for HP DL160 G6 (589711-B21) | 1 |
| Memory | MEM 4GB (1x4Gb 2Rank) 2Rx4 PC3-10600R-9 Registered DIMM (500658-B21) | 4 |
| HDD | HDD 450GB 15k 6G LFF SAS 3.5" HotPlug Dual Port Universal Hard Drive (516816-B21) | 2 |

Network Switch:

| Type | Model | Quantity |
| --- | --- | --- |
| Switch | Cisco WS-C2960S-24TS-L | 2 |
| Stack module | Cisco Catalyst 2960S FlexStack Stack Module optional for LAN Base [C2960S-STACK] | 2 |

We also included an HP 36U rack, an APC 5000VA, and a KVM switch.

OS preparation and tuning

You may wonder where the SSDs are plugged in if the chassis has only 24 hot-swap bays. They are connected to the motherboard and placed inside the server (there was free space).

Our production requirements for this solution allow the hardware to be powered off for 10 minutes. If your requirements are stricter, use only hot-swap drives. If your production has to run 24/7, use fault-tolerant solutions.

Create two RAID5 volumes on the RAID controller. Each volume consists of 12 HDDs.

Create a software RAID1 array md0 from the 40 GB SSDs with mdadm.

Create a software RAID1 array md1 from the 120 GB SSDs on the MGS/MDS.

Create two bonds on each of the MGS/MDS/OSS servers:

bond0 (2 ports) - FrontEnd

bond1 (4 ports) - BackEnd

BONDING_OPTS="miimon=100 mode=6"
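As a quick sanity check of the bonding layout against the task, the sketch below compares the aggregate client demand with the aggregate back-end capacity. It assumes every bonded port runs at 1 Gbit/s (the adapters listed above are gigabit) and that all five server nodes take part; the function name is ours. With adaptive load balancing a single client-server connection is usually limited to one port's speed, so these figures are upper bounds rather than guaranteed throughput.

```python
def aggregate_gbits(nodes, ports_per_node, port_speed_gbits=1.0):
    """Return the summed line rate of all ports, in Gbit/s."""
    return nodes * ports_per_node * port_speed_gbits


# 10 clients, each required to read/write at 1 Gbit/s.
client_demand = aggregate_gbits(nodes=10, ports_per_node=1)

# 5 server nodes, each with the 4-port bond1 on the back-end network.
backend_capacity = aggregate_gbits(nodes=5, ports_per_node=4)

if __name__ == "__main__":
    print(f"client demand:    {client_demand:.0f} Gbit/s")
    print(f"back-end capacity: {backend_capacity:.0f} Gbit/s")
    print(f"headroom factor:  {backend_capacity / client_demand:.1f}")
```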

Disable SELinux

Install the following software:

yum install mc openssh-clients openssh-server net-snmp man sysstat rsync htop trafshow nslookup ntp

Configure ntp

Create the same users and groups (uid:gid) on all servers

Tune the TCP parameters in sysctl.conf:

# increase Linux TCP buffer limits

net.core.rmem_max = 8388608

net.core.wmem_max = 8388608

# increase default and maximum Linux TCP buffer sizes

net.ipv4.tcp_rmem = 4096 262144 8388608

net.ipv4.tcp_wmem = 4096 262144 8388608

# increase max backlog to avoid dropped packets

net.core.netdev_max_backlog=2500

net.ipv4.tcp_mem=8388608 8388608 8388608

sysctl net.ipv4.tcp_ecn=0
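To get a feel for what the 8 MB buffer limit means, the following sketch parses a few of the settings above and works out the longest round-trip time over which such a buffer can keep a 1 Gbit/s link full (buffer size divided by bandwidth). The parser and the `SAMPLE` text are ours and only handle simple `key = value` lines with integer values.

```python
def parse_sysctl(text):
    """Parse 'key = v1 v2 ...' lines into {key: [int, ...]}, skipping comments."""
    settings = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        settings[key.strip()] = [int(v) for v in value.split()]
    return settings


SAMPLE = """
# increase Linux TCP buffer limits
net.core.rmem_max = 8388608
net.core.wmem_max = 8388608
net.ipv4.tcp_rmem = 4096 262144 8388608
net.ipv4.tcp_wmem = 4096 262144 8388608
net.core.netdev_max_backlog=2500
"""

settings = parse_sysctl(SAMPLE)
max_buf = settings["net.core.rmem_max"][0]

# Seconds of round-trip time that max_buf bytes can cover at 1 Gbit/s.
rtt_covered = max_buf * 8 / 1e9

if __name__ == "__main__":
    print(f"max buffer: {max_buf / 1024 ** 2:.0f} MiB")
    print(f"covers an RTT of about {rtt_covered * 1000:.0f} ms at 1 Gbit/s")
```

On a local back-end network the round-trip time is far below this, so the limit is generous for a single gigabit port.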

Installing Lustre

On the servers:

Download the utility packages:

http://downloads.whamcloud.com/public/e2fsprogs/1.42.3.wc1/el6/RPMS/x86_64/

and Lustre:

http://downloads.whamcloud.com/public/lustre/lustre-2.1.2/el6/server/RPMS/x86_64/

Install utilities:

rpm -e e2fsprogs-1.41.12-11.el6.x86_64

rpm -e e2fsprogs-libs-1.41.12-11.el6.x86_64

rpm -Uvh e2fsprogs-libs-1.42.3.wc1-7.el6.x86_64.rpm

rpm -Uvh e2fsprogs-1.42.3.wc1-7.el6.x86_64.rpm

rpm -Uvh libss-1.42.3.wc1-7.el6.x86_64.rpm

rpm -Uvh libcom_err-1.42.3.wc1-7.el6.x86_64.rpm

Install Lustre:

rpm -ivh kernel-firmware-2.6.32-220.el6_lustre.g4554b65.x86_64.rpm

rpm -ivh kernel-2.6.32-220.el6_lustre.g4554b65.x86_64.rpm

rpm -ivh lustre-ldiskfs-3.3.0-2.6.32_220.el6_lustre.g4554b65.x86_64.x86_64.rpm

rpm -ivh perf-2.6.32-220.el6_lustre.g4554b65.x86_64.rpm

rpm -ivh lustre-modules-2.1.1-2.6.32_220.el6_lustre.g4554b65.x86_64.x86_64.rpm

rpm -ivh lustre-2.1.1-2.6.32_220.el6_lustre.g4554b65.x86_64.x86_64.rpm

Check /boot/grub/grub.conf to make sure the Lustre kernel is booted by default

Configure network LNET:

echo "options lnet networks=tcp0(bond1)" > /etc/modprobe.d/lustre.conf

reboot

On the clients:

Update kernel:

yum update kernel-2.6.18-308.4.1.el5.x86_64

reboot

Download the utility packages:

http://downloads.whamcloud.com/public/e2fsprogs/1.41.90.wc4/el5/x86_64/

and Lustre:

http://downloads.whamcloud.com/public/lustre/lustre-1.8.8-wc1/el5/client/RPMS/x86_64/

Install utilities:

rpm -Uvh --nodeps e2fsprogs-1.41.90.wc4-0redhat.x86_64.rpm

rpm -ivh uuidd-1.41.90.wc4-0redhat.x86_64.rpm

Install Lustre:

rpm -ivh lustre-client-modules-1.8.8-wc1_2.6.18_308.4.1.el5_gbc88c4c.x86_64.rpm

rpm -ivh lustre-client-1.8.8-wc1_2.6.18_308.4.1.el5_gbc88c4c.x86_64.rpm

Configure network LNET:

echo "options lnet networks=tcp0(eth1)" > /etc/modprobe.d/lustre.conf

reboot
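The only difference between the server and client LNET configuration is the interface name. A small helper like the one below (our own naming, not part of Lustre) can generate the line for each role, which helps when the configuration is rolled out by a script:

```python
def lnet_options(interface, network="tcp0"):
    """Build the modprobe options line that binds an LNET network to an interface."""
    return f"options lnet networks={network}({interface})"


if __name__ == "__main__":
    # Servers use the back-end bond, clients use a plain Ethernet interface.
    print(lnet_options("bond1"))
    print(lnet_options("eth1"))
```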

Deploying Lustre

On the MGS/MDS/OSS server:

mkfs.lustre --fsname=FS --mgs --mdt --index=0 /dev/md1 (/dev/md1 is the software RAID1)

mkdir /mdt

mount -t lustre /dev/md1 /mdt

echo "/dev/md1 /mdt lustre defaults,_netdev 0 0" >> /etc/fstab

mkfs.lustre --fsname=FS --mgsnode=10.255.255.1@tcp0 --ost --index=0 /dev/sda (where /dev/sda is a RAID5 volume)

mkfs.lustre --fsname=FS --mgsnode=10.255.255.1@tcp0 --ost --index=1 /dev/sdb (where /dev/sdb is a RAID5 volume)

mkdir /ost0

mkdir /ost1

mount -t lustre /dev/sda /ost0

mount -t lustre /dev/sdb /ost1

echo "/dev/sda /ost0 lustre defaults,_netdev 0 0" >> /etc/fstab

echo "/dev/sdb /ost1 lustre defaults,_netdev 0 0" >> /etc/fstab

mkdir /FS

mount -t lustre 10.255.255.1@tcp0:/FS /FS

echo "10.255.255.1@tcp0:/FS /FS lustre defaults,_netdev 0 0" >> /etc/fstab

On the OSS servers:

mkfs.lustre --fsname=FS --mgsnode=10.255.255.1@tcp0 --ost --index=N /dev/sda (where N is the node number, /dev/sda is a RAID5 volume)

mkfs.lustre --fsname=FS --mgsnode=10.255.255.1@tcp0 --ost --index=N+1 /dev/sdb (where N is the node number, /dev/sdb is a RAID5 volume)

mkdir /ostN

mkdir /ostN+1

mount -t lustre /dev/sda /ostN

mount -t lustre /dev/sdb /ostN+1

echo "/dev/sda /ostN lustre defaults,_netdev 0 0" >> /etc/fstab

echo "/dev/sdb /ostN+1 lustre defaults,_netdev 0 0" >> /etc/fstab

mkdir /FS

mount -t lustre 10.255.255.1@tcp0:/FS /FS

echo "10.255.255.1@tcp0:/FS /FS lustre defaults,_netdev 0 0" >> /etc/fstab
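OST indexes must be unique across the whole file system, so the N placeholders above need some care. One way to keep them unique is sketched below, under our own assumptions: nodes are numbered from 0 (the combined MGS/MDS/OSS node is node 0), every node carries two OSTs, and node k therefore gets indexes 2k and 2k+1. This matches a layout in which five nodes provide OST 0 through OST 9. The function names are ours; the commands are only printed, not executed.

```python
def ost_indices(node, osts_per_node=2):
    """Return the OST indexes assigned to a node (nodes are numbered from 0)."""
    first = node * osts_per_node
    return list(range(first, first + osts_per_node))


def ost_commands(node, fsname="FS", mgs_nid="10.255.255.1@tcp0",
                 devices=("/dev/sda", "/dev/sdb")):
    """Build the format/mount commands for every OST on one node."""
    commands = []
    for index, device in zip(ost_indices(node, len(devices)), devices):
        commands.append(
            f"mkfs.lustre --fsname={fsname} --mgsnode={mgs_nid} "
            f"--ost --index={index} {device}"
        )
        commands.append(f"mkdir /ost{index}")
        commands.append(f"mount -t lustre {device} /ost{index}")
    return commands


if __name__ == "__main__":
    for line in ost_commands(node=1):
        print(line)
```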

You can also free the 5% of space reserved on each OST without stopping the service:

tune2fs -m 0 /dev/sda

On the clients:

mkdir /FS

mount -t lustre 10.255.255.1@tcp0:/FS /FS

echo "10.255.255.1@tcp0:/FS /FS lustre defaults,_netdev 0 0" >> /etc/fstab

Now you can display the system state:

lfs df -h

FS-MDT0000_UUID 83.8G 2.2G 76.1G 3% /FS[MDT:0]

FS-OST0000_UUID 30.0T 28.6T 1.4T 95% /FS[OST:0]

FS-OST0001_UUID 30.0T 28.7T 1.3T 96% /FS[OST:1]

FS-OST0002_UUID 30.0T 28.6T 1.3T 96% /FS[OST:2]

FS-OST0003_UUID 30.0T 28.7T 1.3T 96% /FS[OST:3]

FS-OST0004_UUID 30.0T 28.3T 1.7T 94% /FS[OST:4]

FS-OST0005_UUID 30.0T 28.2T 1.8T 94% /FS[OST:5]

FS-OST0006_UUID 30.0T 28.3T 1.7T 94% /FS[OST:6]

FS-OST0007_UUID 30.0T 28.2T 1.7T 94% /FS[OST:7]

FS-OST0008_UUID 30.0T 28.3T 1.7T 94% /FS[OST:8]

FS-OST0009_UUID 30.0T 28.2T 1.8T 94% /FS[OST:9]
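The 30.0T reported for each OST is consistent with the hardware: a 12-disk RAID5 volume gives the capacity of 11 disks. The sketch below checks this, assuming the ST3000DM001 is a 3 TB drive in decimal units, that `lfs df -h` reports binary units, and ignoring file system overhead.

```python
TB = 10 ** 12    # decimal terabyte, as used by drive vendors
TIB = 2 ** 40    # binary tebibyte


def raid5_usable_bytes(disks, disk_bytes):
    """RAID5 keeps one disk's worth of parity, so usable space is (n - 1) disks."""
    if disks < 3:
        raise ValueError("RAID5 needs at least 3 disks")
    return (disks - 1) * disk_bytes


per_ost = raid5_usable_bytes(disks=12, disk_bytes=3 * TB)
total = 10 * per_ost  # 5 nodes with 2 RAID5 volumes each

if __name__ == "__main__":
    print(f"per OST: {per_ost / TIB:.1f} TiB")
    print(f"total:   {total / TIB:.1f} TiB")
```

Ten such volumes add up to roughly 300 TiB, which is how five 24-disk nodes meet the capacity target.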

Working with Lustre

This topic is covered in detail in the official manual, so we will dwell on only two tasks:

1. Rebalancing data across the OSTs after a new node is added

Example:

FS-MDT0000_UUID 83.8G 2.2G 76.1G 3% /FS[MDT:0]

FS-OST0000_UUID 30.0T 28.6T 1.4T 95% /FS[OST:0]

FS-OST0001_UUID 30.0T 28.7T 1.3T 96% /FS[OST:1]

FS-OST0002_UUID 30.0T 28.6T 1.3T 96% /FS[OST:2]

FS-OST0003_UUID 30.0T 28.7T 1.3T 96% /FS[OST:3]

FS-OST0004_UUID 30.0T 28.3T 1.7T 94% /FS[OST:4]

FS-OST0005_UUID 30.0T 28.2T 1.8T 94% /FS[OST:5]

FS-OST0006_UUID 30.0T 28.3T 1.7T 94% /FS[OST:6]

FS-OST0007_UUID 30.0T 28.2T 1.7T 94% /FS[OST:7]

FS-OST0008_UUID 30.0T 28.3T 1.7T 94% /FS[OST:8]

FS-OST0009_UUID 30.0T 28.2T 1.8T 94% /FS[OST:9]

FS-OST000a_UUID 30.0T 2.1T 27.9T 7% /FS[OST:10]

FS-OST000b_UUID 30.0T 2.2T 27.8T 7% /FS[OST:11]
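A listing like the one above is easy to scan by eye, but with more OSTs it can help to pick out the nearly full targets automatically. The sketch below parses output in this format and lists the OSTs at or above a usage threshold. The parser, the 90% threshold, and the shortened `SAMPLE` are our own choices for illustration.

```python
def parse_lfs_df(output):
    """Parse 'lfs df -h' style rows into dicts; lines of any other shape are skipped."""
    rows = []
    for line in output.splitlines():
        fields = line.split()
        if len(fields) != 6 or "_UUID" not in fields[0]:
            continue
        target, size, used, avail, pct, mount = fields
        rows.append({
            "target": target,
            "used_pct": int(pct.rstrip("%")),
            "is_ost": "-OST" in target,
        })
    return rows


def nearly_full_osts(rows, threshold_pct=90):
    """Return the names of OSTs whose usage is at or above the threshold."""
    return [r["target"] for r in rows
            if r["is_ost"] and r["used_pct"] >= threshold_pct]


SAMPLE = """
FS-MDT0000_UUID 83.8G 2.2G 76.1G 3% /FS[MDT:0]
FS-OST0000_UUID 30.0T 28.6T 1.4T 95% /FS[OST:0]
FS-OST000a_UUID 30.0T 2.1T 27.9T 7% /FS[OST:10]
"""

if __name__ == "__main__":
    for name in nearly_full_osts(parse_lfs_df(SAMPLE)):
        print(name)
```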

There could be two problems:

1.1 New data cannot be written because a single OST has run out of free space

1.2 Increased I/O load on the new node.

Use the following procedure to solve these problems: deactivate the full OST, migrate its data to the other OSTs, then activate it again.

Example:

lctl --device N deactivate

lfs find /FS --ost {OST_UUID} -size +1G | lfs_migrate -y

lctl --device N activate

2. Backup

You need to stop data writes by deactivating the targets and then take the backup.

Alternatively, you can use an LVM2 snapshot, but production will be affected.

Afterword

I now recommend using Lustre 1.88wc4 with CentOS 5.8 as the stable option.

Special thanks to the Whamcloud manual for its exhaustive documentation.

About author