Deploying ScaleIO 1.3

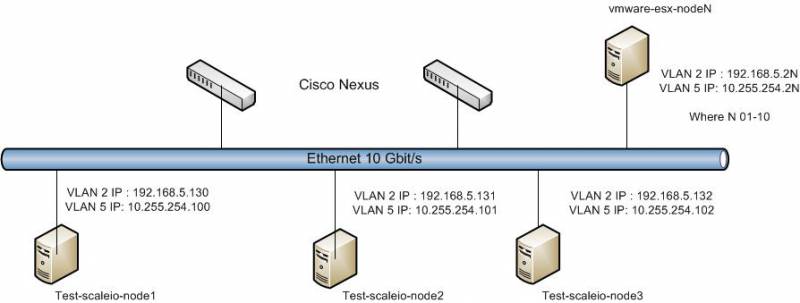

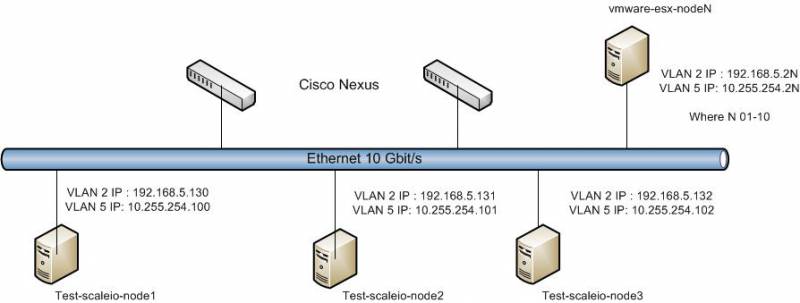

This article walks through building block storage on ScaleIO 1.3. ScaleIO was deployed on three CentOS Linux servers, each with 14 local disks, with several VMware vSphere 5.5 hosts as clients. All hosts are connected over 10 Gbit/s Ethernet.

Introduction.

EMC ScaleIO is a software-defined storage product from EMC Corporation that creates a server-based storage area network (SAN) from local application server storage, converting direct-attached storage into shared block storage. It uses existing host-based internal storage to create a scalable, high-performance, low-cost server SAN. EMC promotes its ScaleIO server storage-area network software as a way to converge computing resources and commodity storage into a “single-layer architecture.”

ScaleIO can scale from three compute/storage nodes to over 1,000 nodes that can drive up to 240 million IOPS of performance. Developers can deploy the ScaleIO software on on-premises commodity infrastructure or in the cloud and then port their applications back into a production ScaleIO instance.

At the last EMC DAY ROADSHOW 2016 in Moscow it was announced that ScaleIO will become open source*.

Scheme and Equipment.

Scheme

LACP bonding is used to connect the ScaleIO nodes to the Ethernet switches. The Ethernet switches are configured with vPC.

Equipment

ScaleIO nodes:

Proc - Intel Xeon E5520/5620

Memory - 16 GB

OS DISK - 2X40GB (RAID1)

DISK - 14x4TB

Configuration

A short overview of the initial CentOS configuration on the test-scaleio-node hosts:

1. Configure udev rules for each disk. For example, create links in /dev named 1-hdd, 2-hdd, ..., 14-hdd. Without these links, if one or more HDDs fail and the node is then rebooted, the SDS devices will be associated with the wrong HDDs.

2. Put all Ethernet ports into a bond (mode 4). VLAN 2 is for management, VLAN 5 is for data.

3. Set up passwordless access between the nodes for user root. The key should be created on one node and then copied to /root/.ssh/ on the other nodes.
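A minimal sketch of how such rules could be generated, assuming the disks are matched by serial number through the udev property ID_SERIAL_SHORT. The function name, the placeholder serials and the rules file name are illustrative, not taken from the original setup; real serials can be read with `udevadm info --query=property --name=/dev/sdX`.

```shell
# Print one udev rule per disk serial; the N-th serial gets the link /dev/N-hdd.
# Assumption: disks are identified by ID_SERIAL_SHORT (placeholder serials below).
make_hdd_rules() {
    n=1
    for serial in "$@"; do
        printf 'KERNEL=="sd?", SUBSYSTEM=="block", ENV{ID_SERIAL_SHORT}=="%s", SYMLINK+="%s-hdd"\n' "$serial" "$n"
        n=$((n + 1))
    done
}

# Example (hypothetical serials and file name):
# make_hdd_rules SERIAL_A SERIAL_B > /etc/udev/rules.d/99-scaleio-hdd.rules
# udevadm control --reload-rules && udevadm trigger
```

Matching on a serial rather than on the kernel name (sda, sdb, ...) is what keeps the link stable when a failed disk changes the enumeration order.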

ssh-keygen -t dsa (creation of passwordless key)

cd /root/.ssh

cat id_dsa.pub >> authorized_keys

chown root:root authorized_keys

chmod 600 authorized_keys

echo "StrictHostKeyChecking no" > config

4. Don't forget to add all host names to /etc/hosts.

5. Configure ntpd on all nodes

6. IPTABLES/Firewall

Allow the sshd port and the ScaleIO incoming TCP ports 6611, 9011 and 7072 (see the official EMC documentation).

7. Disable SELinux
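As a sketch, the rules could look like the following with plain iptables. The helper only prints the commands so they can be reviewed first; port 22 assumes sshd listens on its default port, and the function name is illustrative.

```shell
# Print (do not execute) one iptables ACCEPT rule per incoming TCP port.
scaleio_fw_rules() {
    for port in "$@"; do
        echo "iptables -I INPUT -p tcp --dport $port -j ACCEPT"
    done
}

# sshd (assumed default port 22) plus the ScaleIO ports listed above.
scaleio_fw_rules 22 6611 9011 7072
```

Once the output looks right it can be piped to `sh` as root. The rules still have to be saved in whatever way your distribution persists iptables rules.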
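One possible way to do this, assuming the standard config file /etc/selinux/config. The helper name is illustrative; it takes the file path as an argument so it can be tried on a copy first.

```shell
# Rewrite the SELINUX= line of the given config file to "disabled".
# The SELINUXTYPE= line is left untouched because the pattern requires "SELINUX=".
disable_selinux_in() {  # $1 = path to the SELinux config file
    tmp=$(mktemp) || return 1
    sed 's/^SELINUX=.*/SELINUX=disabled/' "$1" > "$tmp" && cat "$tmp" > "$1"
    status=$?
    rm -f "$tmp"
    return "$status"
}

# Usage as root (assumed default path):
# setenforce 0                              # permissive mode until the next reboot
# disable_selinux_in /etc/selinux/config    # takes effect after a reboot
```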

8. Configuration of sysctl.conf

net.ipv4.tcp_timestamps=0

net.ipv4.tcp_sack=0

net.core.netdev_max_backlog=250000

net.core.rmem_max=8388608

net.core.wmem_max=8388608

net.core.rmem_default=8388608

net.core.wmem_default=8388608

net.core.optmem_max=8388608

net.ipv4.tcp_mem=8388608 8388608 8388608

net.ipv4.tcp_rmem=4096 87380 8388608

net.ipv4.tcp_wmem=4096 65536 8388608

9. Add max open files to /etc/security/limits.conf (depends on your requirements)

* hard nofile 1000000

* soft nofile 1000000

ScaleIO installation

1. Downloading

Links for download.

A few words about the Linux packages. All packages come as RPMs, and if you use Spacewalk you can create a ScaleIO repository for updates.

Installing on ScaleIO nodes

Nodes test-scaleio-node1 and test-scaleio-node2 will be MDMs, and test-scaleio-node3 will be the Tie-Breaker.

Installing required packages:

yum install libaio numactl mutt bash-completion python -y

Installing package for MDM nodes:

rpm -ivh /downloads/EMC-ScaleIO-mdm-1.32-403.2.el7.x86_64.rpm

Installing package for Tie-Breaker node:

rpm -ivh /downloads/EMC-ScaleIO-tb-1.32-403.2.el7.x86_64.rpm

Installing packages on all nodes:

rpm -ivh /downloads/EMC-ScaleIO-callhome-1.32-403.2.el7.x86_64.rpm

rpm -ivh /downloads/EMC-ScaleIO-sds-1.32-403.2.el7.x86_64.rpm

Installing on VMware hosts

esxcli software acceptance set --level=PartnerSupported

esxcli software vib install -d /tmp/sdc-1.32.343.0-esx5.5.zip

reboot

Cluster configuration

1. Starting services

For MDM nodes:

systemctl start mdm.service

systemctl enable mdm.service

For Tie-Breaker node:

systemctl start tb.service

systemctl enable tb.service

For all nodes:

systemctl start sds.service callhome.service

systemctl enable sds.service callhome.service

2. Configuring MDM cluster

All actions are performed on test-scaleio-node1.

Log in to the first MDM to accept the license and change the password:

scli --add_primary_mdm --primary_mdm_ip 10.255.254.100 --accept_license

scli --login --username admin --password admin

scli --set_password --mdm_ip 10.255.254.100 --old_password admin --new_password YourPass

scli --login --username admin --password YourPass #Required to perform any operations.

Add secondary MDM and Tie-Breaker to cluster:

scli --add_secondary_mdm --secondary_mdm_ip 10.255.254.101

scli --add_tb --tb_ip 10.255.254.102

Checking cluster:

scli --query_cluster

Switch to cluster mode:

scli --switch_to_cluster_mode

scli --query_cluster

Now you can also use the GUI for SDS configuration. Install the EMC ScaleIO GUI on your Windows PC.

3. Configuring SDS.

Set up the protection domain and storage pool:

scli --add_protection_domain --protection_domain_name test

scli --add_storage_pool --protection_domain_name test --storage_pool_name test01

Configuring SDSs and devices:

scli --add_sds --sds_ip 10.255.254.100 --protection_domain_name test --storage_pool_name test01 --device_path /dev/1-hdd,/dev/2-hdd,/dev/3-hdd,/dev/4-hdd,/dev/5-hdd,/dev/6-hdd,/dev/7-hdd,/dev/8-hdd,/dev/9-hdd,/dev/10-hdd,/dev/11-hdd,/dev/12-hdd,/dev/13-hdd,/dev/14-hdd --device_name sds01100,sds02100,sds03100,sds04100,sds05100,sds06100,sds07100,sds08100,sds09100,sds10100,sds11100,sds12100,sds13100,sds14100

scli --add_sds --sds_ip 10.255.254.101 --protection_domain_name test --storage_pool_name test01 --device_path /dev/1-hdd,/dev/2-hdd,/dev/3-hdd,/dev/4-hdd,/dev/5-hdd,/dev/6-hdd,/dev/7-hdd,/dev/8-hdd,/dev/9-hdd,/dev/10-hdd,/dev/11-hdd,/dev/12-hdd,/dev/13-hdd,/dev/14-hdd --device_name sds01101,sds02101,sds03101,sds04101,sds05101,sds06101,sds07101,sds08101,sds09101,sds10101,sds11101,sds12101,sds13101,sds14101

scli --add_sds --sds_ip 10.255.254.102 --protection_domain_name test --storage_pool_name test01 --device_path /dev/1-hdd,/dev/2-hdd,/dev/3-hdd,/dev/4-hdd,/dev/5-hdd,/dev/6-hdd,/dev/7-hdd,/dev/8-hdd,/dev/9-hdd,/dev/10-hdd,/dev/11-hdd,/dev/12-hdd,/dev/13-hdd,/dev/14-hdd --device_name sds01102,sds02102,sds03102,sds04102,sds05102,sds06102,sds07102,sds08102,sds09102,sds10102,sds11102,sds12102,sds13102,sds14102
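Long hand-typed device lists are easy to mistype, so it may be safer to generate them. The sketch below builds both comma-separated lists and prints the resulting scli commands for review. It assumes the naming scheme used above (sdsNN followed by the last octet of the node IP); the helper function names are illustrative.

```shell
# Build "/dev/1-hdd,/dev/2-hdd,...,/dev/N-hdd".
device_paths() {  # $1 = number of disks
    i=1; out=""
    while [ "$i" -le "$1" ]; do
        out="${out:+$out,}/dev/${i}-hdd"
        i=$((i + 1))
    done
    echo "$out"
}

# Build "sds01SUFFIX,sds02SUFFIX,...", with a two-digit disk index.
device_names() {  # $1 = number of disks, $2 = node suffix (last IP octet)
    i=1; out=""
    while [ "$i" -le "$1" ]; do
        out="${out:+$out,}$(printf 'sds%02d%s' "$i" "$2")"
        i=$((i + 1))
    done
    echo "$out"
}

# Print one command per node; nothing is executed here.
for octet in 100 101 102; do
    echo "scli --add_sds --sds_ip 10.255.254.$octet --protection_domain_name test --storage_pool_name test01 --device_path $(device_paths 14) --device_name $(device_names 14 "$octet")"
done
```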

Set up the rebalance, rebuild and spare policies:

scli --set_rebalance_mode --protection_domain_name test --storage_pool_name test01 --enable_rebalance

scli --set_rebuild_policy --protection_domain_name test --storage_pool_name test01 --policy no_limit

scli --modify_spare_policy --protection_domain_name test --storage_pool_name test01 --spare_percentage 34 --i_am_sure

The spare policy is set to 34% spare capacity (for 3 SDS nodes).

Without it you will get the warning “Configured spare capacity is smaller than largest fault unit”.

You can also set the read cache size:
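The 34% figure follows from simple arithmetic if each SDS node is treated as one fault unit and all nodes have the same capacity (both are assumptions of this sketch): the spare must cover one node out of N, so the percentage is 100/N rounded up.

```shell
# Smallest whole percentage that covers one of N equally sized SDS nodes.
spare_percentage() {  # $1 = number of SDS nodes
    echo $(( (100 + $1 - 1) / $1 ))
}

spare_percentage 3   # prints 34
```

With nodes of unequal capacity the largest node would determine the value instead, so this helper would underestimate it.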

scli --set_sds_rmcache_size --protection_domain_name test --rmcache_size_mb 2048

4. Creating volume:

scli --add_volume --protection_domain_name test --storage_pool_name test01 --size_gb YOUR_SIZE_IN_GB --volume_name test_vol0

5. Mapping volume to clients (VMware vSphere hosts):

scli --map_volume_to_sdc --volume_name test_vol0 --sdc_ip 10.255.254.200

Where 10.255.254.200 is the IP of the first vSphere ESX host.

Configuring VMware ESX clients

Generate a UUID by any means. This UUID will be the client identifier of the ESX host.

On the ESX hosts:
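One way to generate such a UUID on a Linux machine is sketched below. It uses `uuidgen` when available and falls back to the kernel's random UUID file. The function name is illustrative, and the upper-casing only mirrors the format of the example value used in this article.

```shell
# Print a random UUID in upper case.
gen_guid() {
    if command -v uuidgen >/dev/null 2>&1; then
        uuidgen
    else
        cat /proc/sys/kernel/random/uuid   # Linux-only fallback
    fi | tr 'a-f' 'A-F'
}

gen_guid
```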

esxcli system module parameters set -m scini -p "IoctlIniGuidStr=12A97DE0-7CED-440C-AF9A-1BF51CAA699F IoctlMdmIPStr=10.255.254.100,10.255.254.101"

esxcli system module load -m scini

reboot (it is not always required)

Now you can perform “Rescan All” under Configuration → Storage Adapters. You will see a Fibre Channel device and will be able to add storage.

Testing

There are no test results yet.

Conclusion.

Despite the absence of test results, ScaleIO has proved productive and reliable in our use.

However, performance suffered when one of the nodes was rebooted. (You can set a rebalance limit to reduce the impact on performance.) We also ran into a situation where we had to wait for full synchronization before we could start using a volume.

Enjoy!

About author

Links.